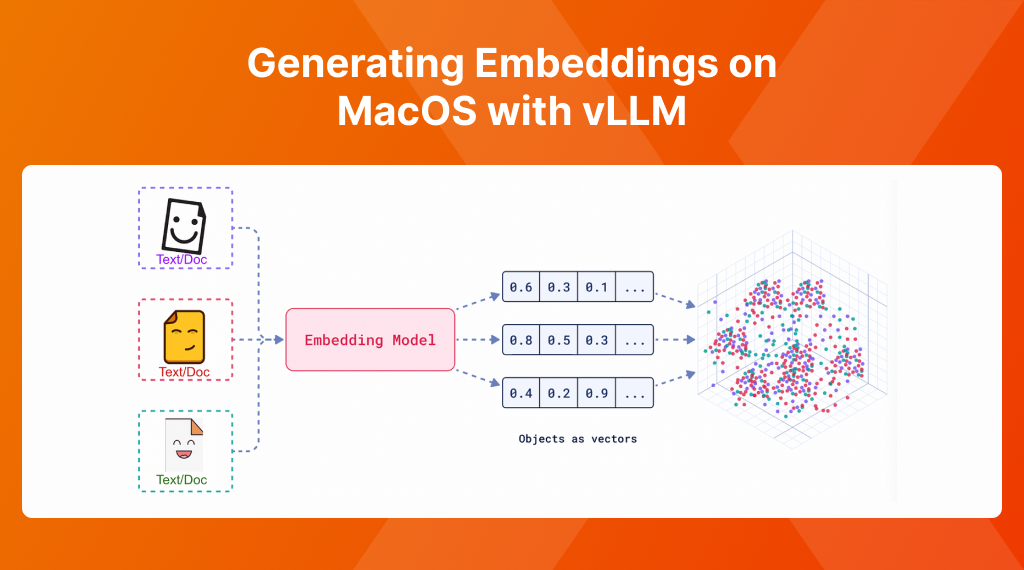

Creating Embeddings with vLLM on macOS

Learn how to generate embeddings with vLLM on macOS

What I expected to be an easy journey turned out to be quite "interesting", since macOS is not the primary target platform for vLLM. Each time I tried to create my embeddings, I got "ValueError: Model architecture ['...'] failed to be inspected. Please check the logs for more details", followed by some Triton issues.

Debugging did not work at first either: running the collect_env script shipped with PyTorch (python -m torch.utils.collect_env) failed with "AttributeError: 'NoneType' object has no attribute 'splitlines'". Luckily, a fix is already in the works.
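While collect_env is failing, a few standard-library calls can still capture the basics you would paste into a bug report. The snippet below is only a minimal sketch and not a replacement for collect_env; the function name basic_platform_report is made up for this post.

```python
import platform
import sys


def basic_platform_report():
    """Return a small dict describing the OS, CPU architecture and Python."""
    return {
        "os": platform.system(),             # "Darwin" on macOS
        "os_release": platform.release(),    # kernel release string
        "macos": platform.mac_ver()[0],      # empty string on non-Mac systems
        "machine": platform.machine(),       # e.g. "arm64" on Apple Silicon
        "python": sys.version.split()[0],    # e.g. "3.12.x"
    }


if __name__ == "__main__":
    for key, value in basic_platform_report().items():
        print(f"{key}: {value}")
```

It only uses the platform and sys modules, so it runs even when PyTorch or vLLM are broken or missing.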

The solution turned out to be building vLLM manually from source, so let's do that!

Installing vLLM on Mac

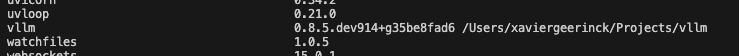

Luckily for us, building vLLM from source is not too difficult and is well explained. We just have to make sure we follow the steps exactly as written (using uv pip did not work for me either).

    uv venv --python 3.12 --seed
    source .venv/bin/activate
    git clone https://github.com/vllm-project/vllm.git
    cd vllm
    pip install -r requirements/cpu.txt
    pip install -e .

Doing so results in a correctly installed vLLM.
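One way to double-check the install from inside the activated virtual environment is to ask Python's package metadata which version it sees. This is a hedged sketch using only the standard library; installed_version is a hypothetical helper name, and the exact version string will depend on the commit you cloned.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    for name in ("vllm", "torch"):
        print(f"{name}: {installed_version(name) or 'not installed'}")
```

If vllm shows up as "not installed", the most likely cause is that the script ran outside the .venv created earlier.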

Running a Demo Embedding

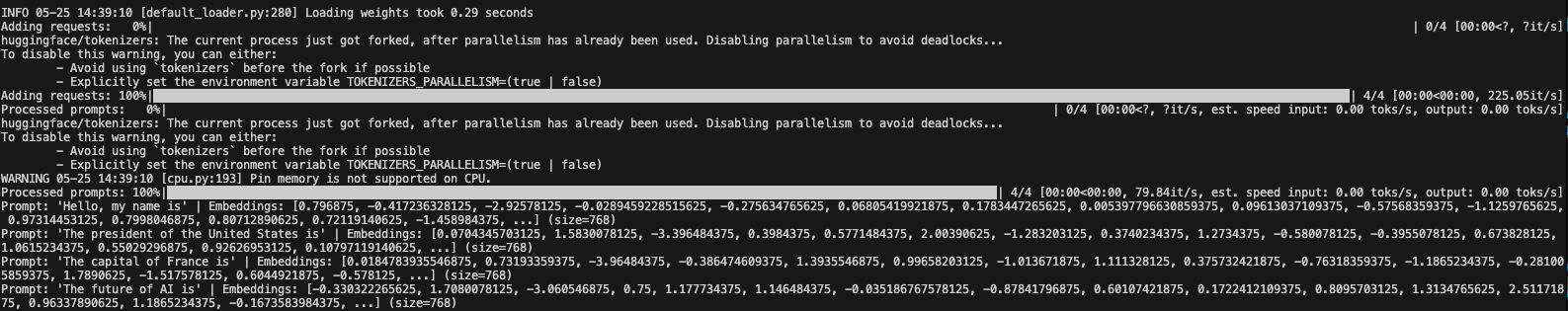

Now we can finally run a demo embedding, so let's create a simple script that prints the embeddings:

    from vllm import LLM

    # vLLM configuration
    VLLM_EMBEDDING_MODEL = "nomic-ai/nomic-embed-text-v1.5"

    # Sample prompts.
    prompts = [
        "Hello, my name is",
        "The future of AI is",
    ]

    # Create an LLM.
    # You should pass task="embed" for embedding models.
    model = LLM(
        model=VLLM_EMBEDDING_MODEL,
        task="embed",
        enforce_eager=True,
        trust_remote_code=True,
    )

    # Generate embeddings. The output is a list of EmbeddingRequestOutputs.
    outputs = model.embed(prompts)

    # Print the outputs, showing only the first 16 values of each embedding.
    for prompt, output in zip(prompts, outputs):
        embeds = output.outputs.embedding
        embeds_trimmed = (
            (str(embeds[:16])[:-1] + ", ...]") if len(embeds) > 16 else embeds
        )
        print(f"Prompt: {prompt!r} | "
              f"Embeddings: {embeds_trimmed} (size={len(embeds)})")

And there we go! Embeddings are being created, and we can continue with any vector-related search.
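As a taste of what that next step could look like, here is a minimal sketch of ranking vectors by cosine similarity in plain Python. It is an illustration under my own assumptions rather than anything vLLM provides: cosine_similarity and rank_by_similarity are hypothetical helper names, and the toy 3-dimensional vectors stand in for real embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (lists of floats)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, candidates):
    """Return candidate indices sorted from most to least similar to `query`."""
    scores = [cosine_similarity(query, c) for c in candidates]
    return sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    # Toy vectors standing in for real embeddings.
    query = [1.0, 0.0, 0.0]
    docs = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [-1.0, 0.0, 0.0]]
    print(rank_by_similarity(query, docs))  # prints [1, 0, 2]
```

With the demo script, each output.outputs.embedding is a list of floats, so those lists can be passed in directly. For anything beyond a handful of vectors, a vector database or a NumPy-based implementation would be the more practical choice.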
