Azure Project Bonsai - Physical CartPole Project

In my previous post I revisited the Bonsai platform. Now it's time to actually start preparing to put it into production! (This will become clearer over time 😉) To start off with a spoiler: Project Bonsai is impressive! 😎

Everything in this article is the result of around 100 hours of work, starting entirely from scratch and covering the following tasks:

- Acquiring the hardware

- Creating my own firmware for the hardware

  - Custom OLED screen software

  - Motor driver

  - Motor encoder handler

  - Angular displacement encoder handler

  - Serial communication

  - …

- Training a Bonsai Brain

- Deploying a Bonsai Brain

- Creating the Serial Interface to the Bonsai Brain

  - Note: The Bonsai Docker Container is running on WSL 2, while Serial is not.

But all of this made it worth it, producing the following:

So let's kick-off where we ended last time, after training a brain to keep a CartPole balanced.

Brain (Model) Export

Creating a Docker Container

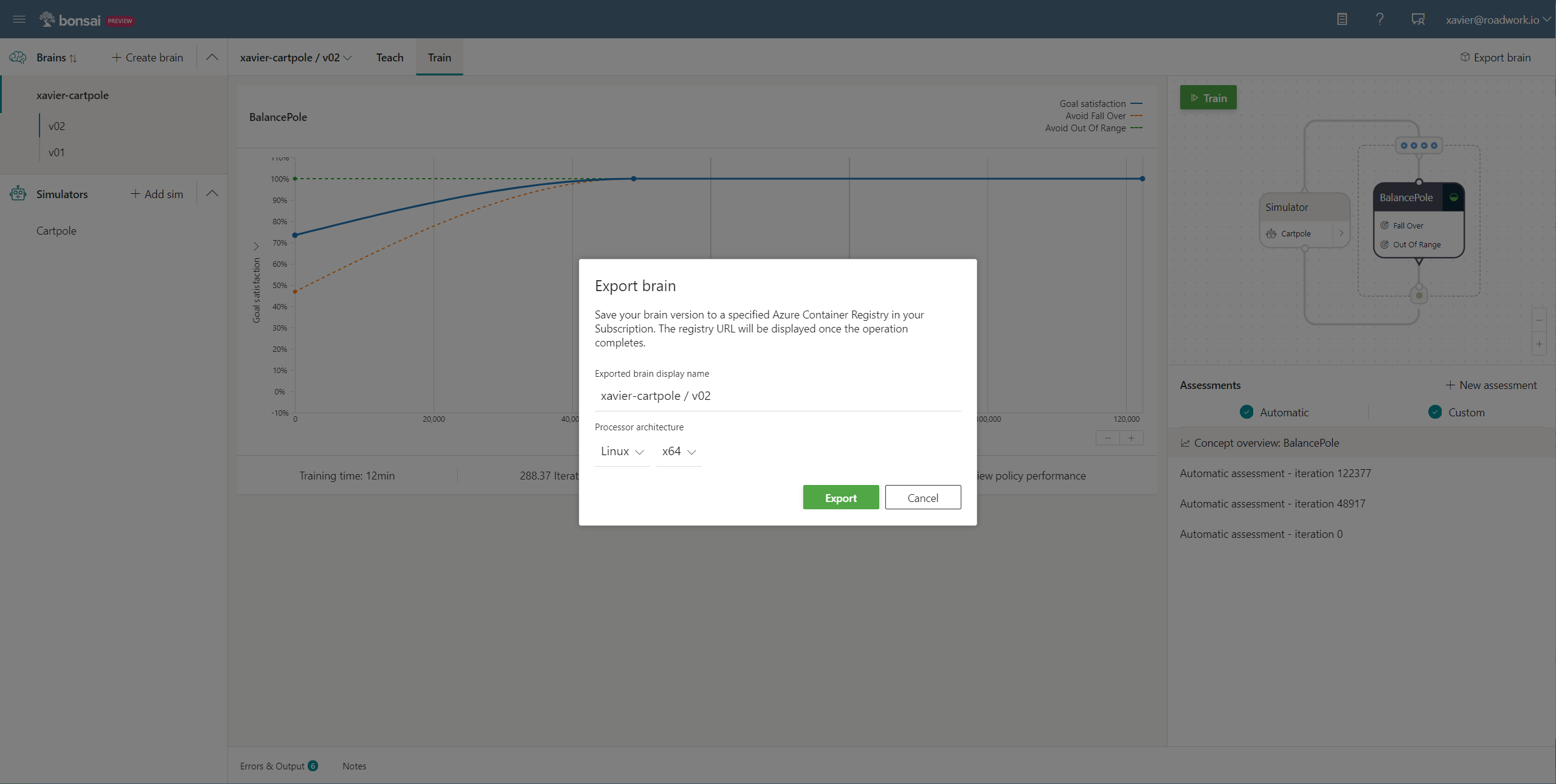

We now have a model trained to our specifications and as we can see, it performs quite well in the simulator!

💡 It's important to note that a simulator != the real world! This means that certain aspects such as motor stutter, correct duty cycle measurement, … are not going to behave in reality the way they do in the simulator!

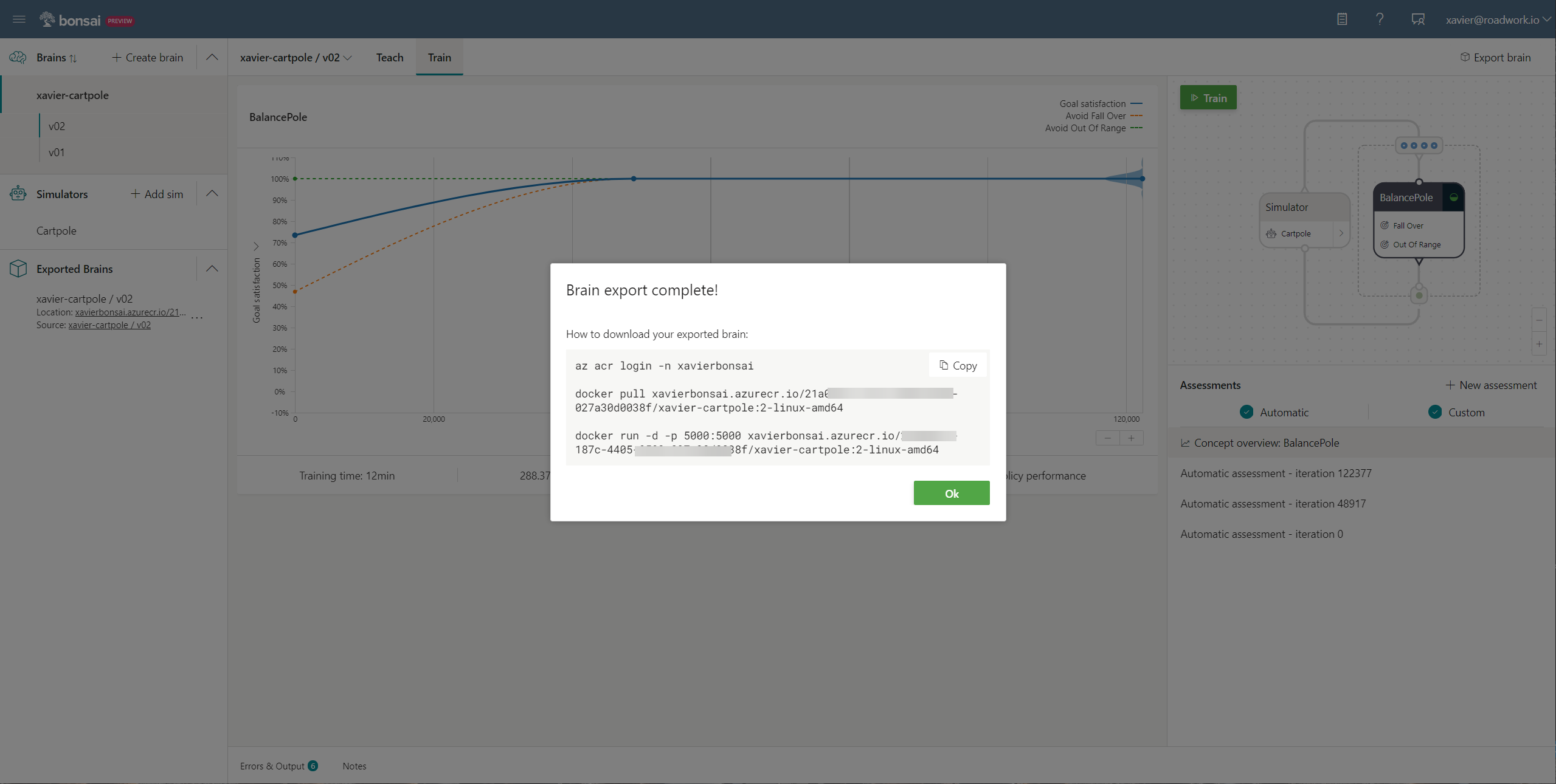

Since we are satisfied with the results, we can now export our brain. Exporting the brain basically means that the Bonsai Platform automatically creates a Docker container image and uploads it to an Azure Container Registry.

Once this upload is finished, we will be given the ability to pull it locally through a few simple commands.

Diving deeper in the Docker Container

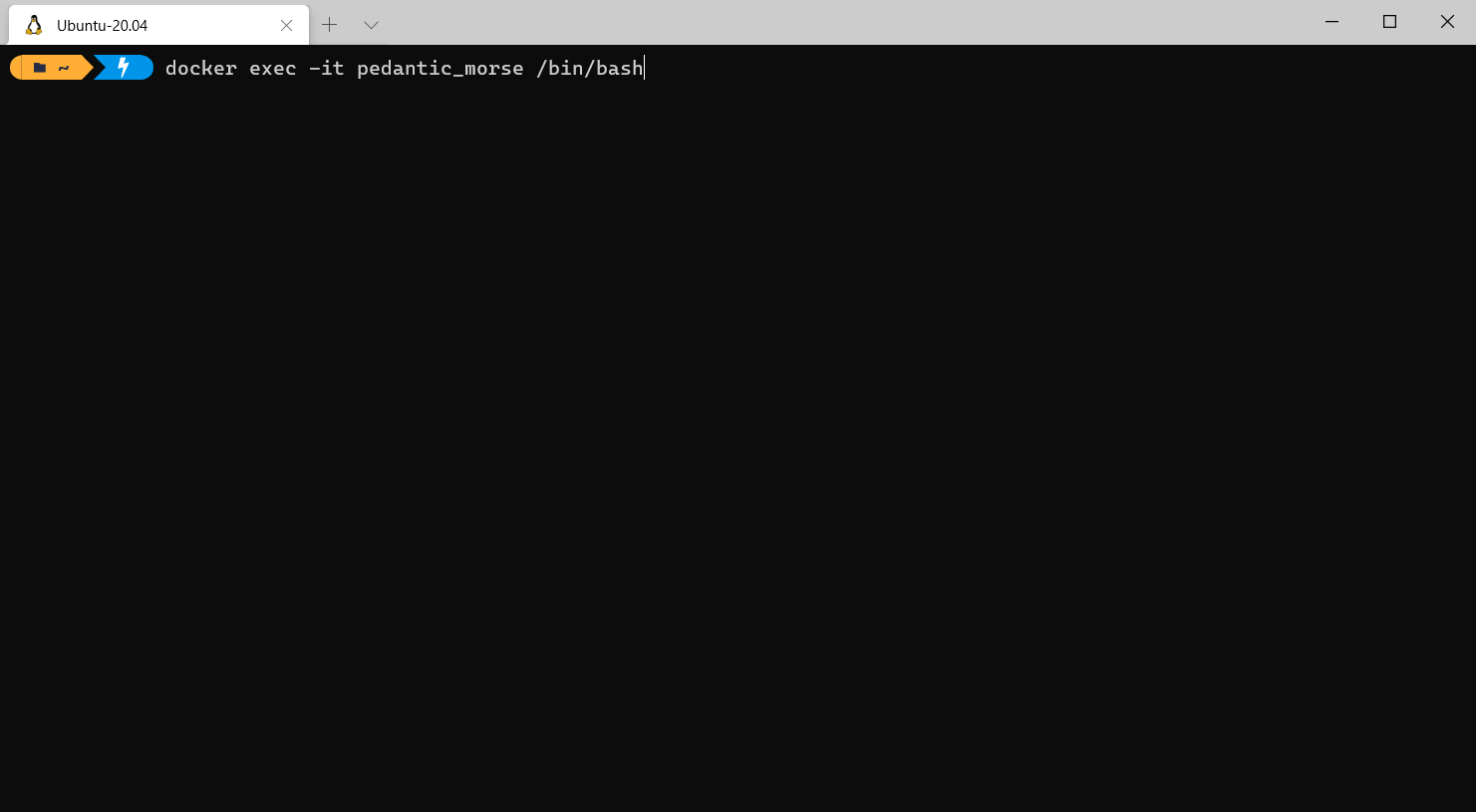

Of course, we do not want to run this blindly, so let's go a bit more in-depth on what this container actually contains! Boot it up and run a quick exec to get into it:

docker exec -it your_container /bin/bash

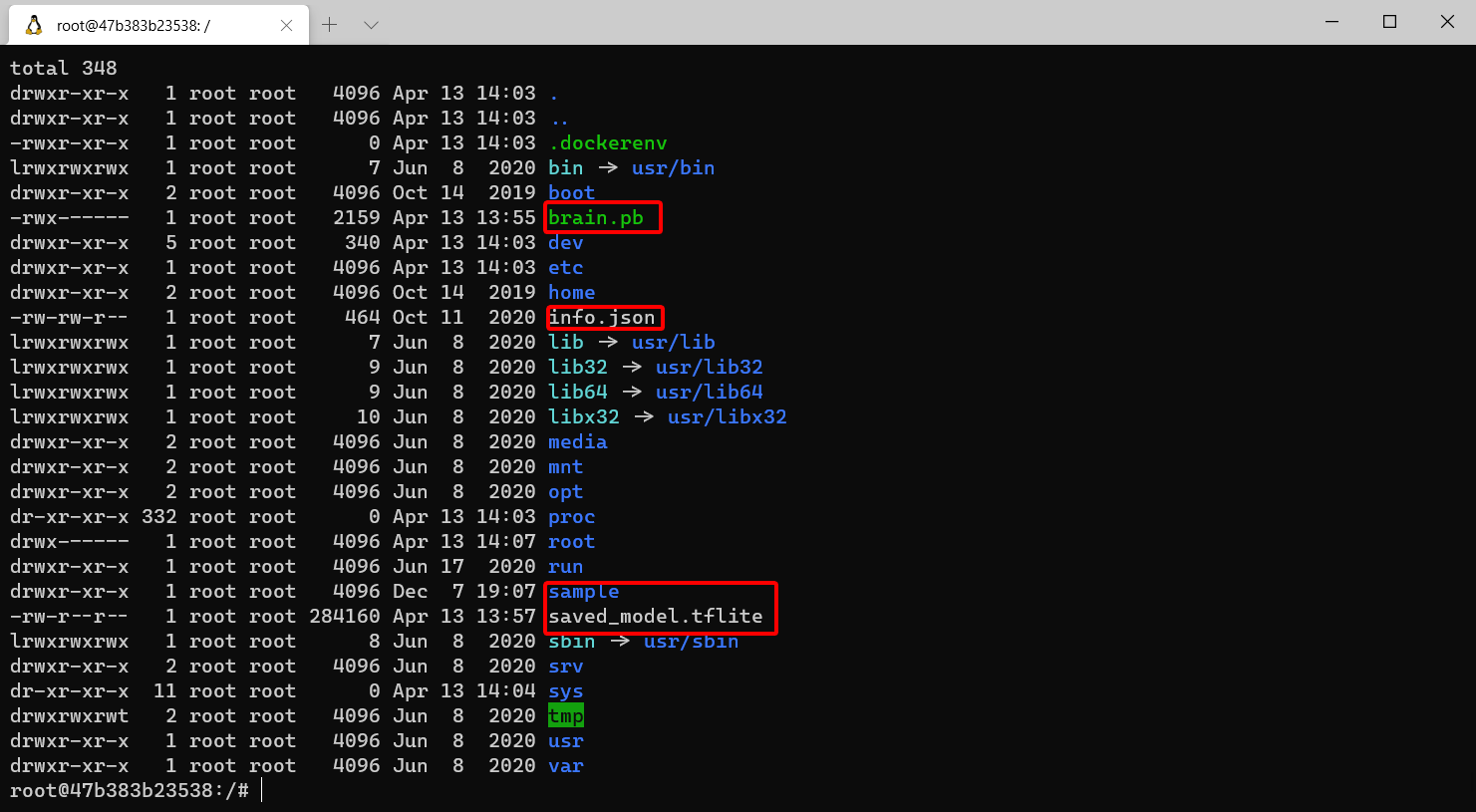

When comparing the root folder structure with ls -la to a normal Linux image, we can instantly see some extra folders and files that are not there by default!

Dissecting these files:

- info.json: Contains some information about Project Bonsai's inference API

- brain.pb: Not verified, but after a disassembly it seems to contain the Protobuf definitions for interacting with the created model in an easy way. This would make sense, seeing that we programmed everything in Inkling and now want to interact with the API in an easy way.

- saved_model.tflite: Our created model saved in the TensorFlow Lite format, so it will run fast on smaller devices!

- sample/: Contains some demo code, but seems to be a leftover, so we can discard this.

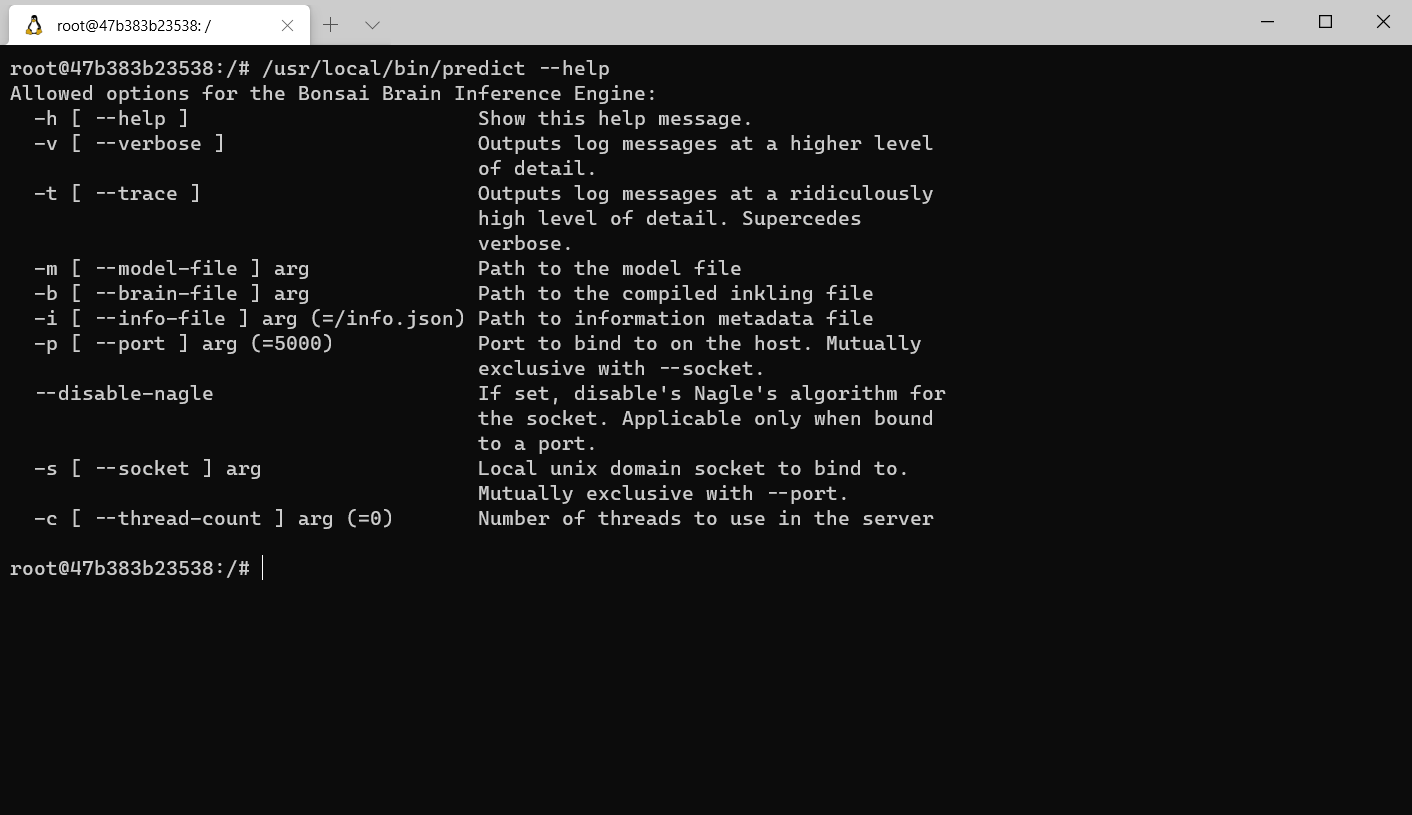

All of the above is interesting, but what is our container actually executing? When we look at the process, we can see a process running named /usr/local/bin/predict.

💡 I'd love to go more in-depth on this one, but it seems to be C code, so not going to bother decompiling and analyzing it. 🙂

Calling our Brain (Model) API

Now that we know what is running, we can see that an HTTP server is started on port 5000, which loads in our brain file. Its documentation is exposed through a Swagger interface at http://localhost:5000/v1/doc/index.html.

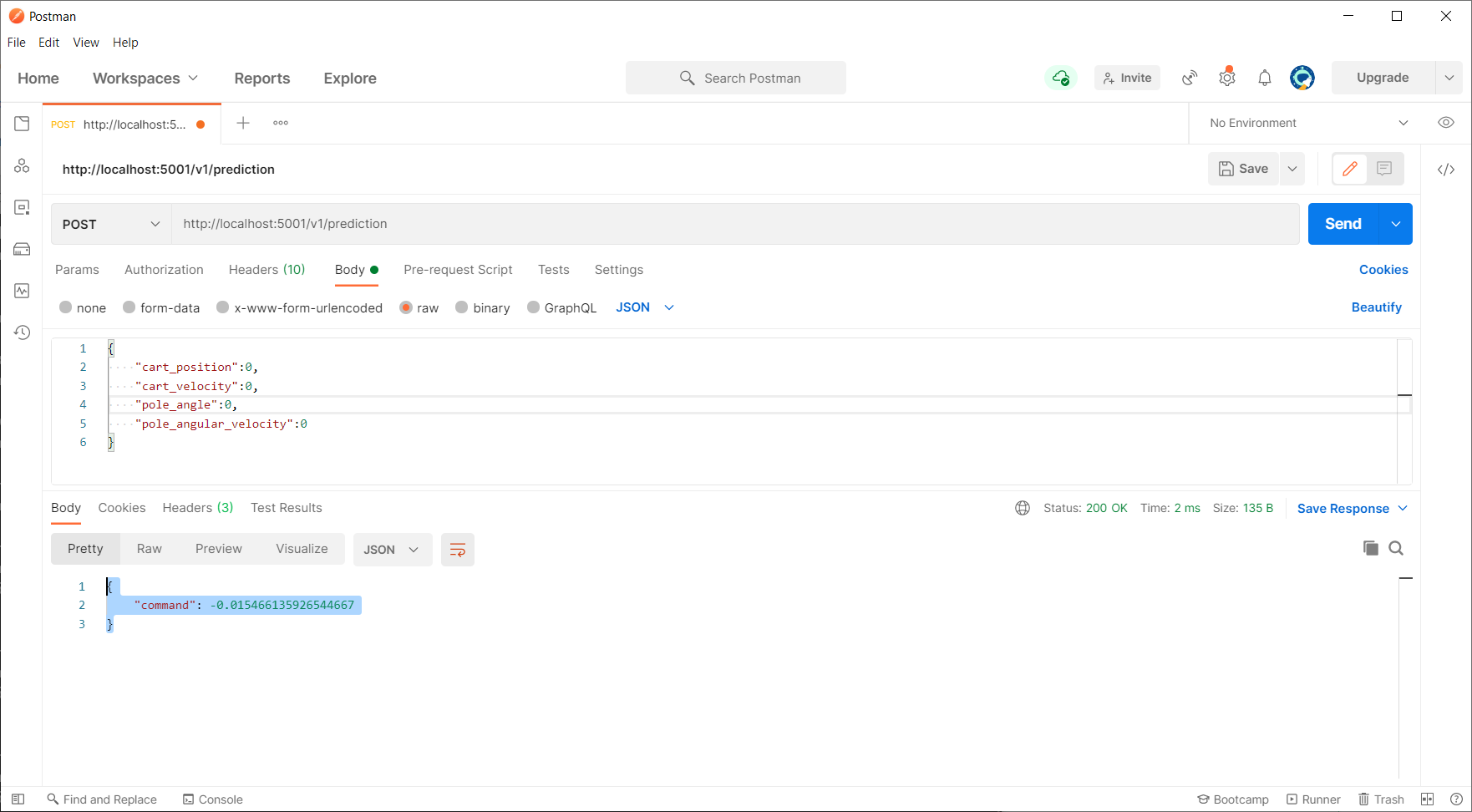

There we can see that we can call our API by executing a POST or GET call to the endpoint http://localhost:5000/v1/prediction with our JSON body. In more detail:

    # Full Example
    POST|GET http://localhost:5000/v1/prediction
    {
      "cart_position": 0,
      "cart_velocity": 0,
      "pole_angle": 0,
      "pole_angular_velocity": 0
    }

    # Curl Example
    curl -X POST "http://localhost:5000/v1/prediction" -H "accept: application/json" -H "Content-Type: application/json" -d "{\"cart_position\":0,\"cart_velocity\":0,\"pole_angle\":0,\"pole_angular_velocity\":0}"

Which will return the command (result) to be executed by our Agent:

    {
      "command": -0.015466135926544667
    }
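The request and response shapes above can be wrapped in two small helpers. This is a minimal sketch and not part of the exported container or any Bonsai SDK: the names `buildPredictionRequest` and `readCommand` are hypothetical, and only the URL path, the four observation fields, and the `command` field come from the example above.

```javascript
// Hypothetical helpers; only the endpoint path and field names come from the post.
const PREDICTION_URL = "http://localhost:5000/v1/prediction";

const OBSERVATION_FIELDS = [
  "cart_position",
  "cart_velocity",
  "pole_angle",
  "pole_angular_velocity",
];

// Build the (url, options) pair that can be handed to fetch(url, options).
function buildPredictionRequest(observation, url = PREDICTION_URL) {
  const body = {};
  for (const field of OBSERVATION_FIELDS) {
    const value = observation[field];
    if (typeof value !== "number" || !Number.isFinite(value)) {
      throw new TypeError(`observation.${field} must be a finite number`);
    }
    body[field] = value;
  }
  return {
    url,
    options: {
      method: "POST",
      // Same headers as the curl example.
      headers: {
        accept: "application/json",
        "Content-Type": "application/json",
      },
      body: JSON.stringify(body),
    },
  };
}

// Extract the numeric command from an already parsed JSON response body.
function readCommand(responseBody) {
  const command = responseBody && responseBody.command;
  if (typeof command !== "number" || !Number.isFinite(command)) {
    throw new TypeError("response does not contain a numeric 'command'");
  }
  return command;
}
```

With these in place, a call would look like `const { url, options } = buildPredictionRequest(obs);` followed by `readCommand(await (await fetch(url, options)).json())`. Validating both directions keeps a malformed sensor reading or an unexpected response from silently reaching the motor.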

When running this through Postman we get:

Running a Real CartPole Example

So everything is set for us to run this on a physical CartPole! For that I have built my own firmware on a premade CartPole that I bought on AliExpress. This firmware sends observations and takes the commands as actions to drive the motor!

⚠️ I have built my own Hardware Firmware to interact with the CartPole over Serial, but will not be covering that in this post!

To communicate over the Serial Interface, I wrote a quick Serial interaction program in JavaScript that forwards the observations received over Serial to the Bonsai Container and writes the returned command back over Serial.

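    // Bonsai: send the latest observation to the exported brain
    const res = await fetch('http://<YOUR_IP>:5001/v1/prediction', {
      method: 'POST',
      // Same content type as in the curl example
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({
        "cart_position": x,
        "cart_velocity": v,
        "pole_angle": theta,
        "pole_angular_velocity": omega
      })
    });

    // The response body has the shape { "command": <number> }
    const data = await res.json();
    const cmd = data.command;

    // We use writeFloat since it converts the float so we can send it over UART
    await arduino.writeFloat(cmd);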
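How `writeFloat` converts the value is not shown in this post. One common way to put a float on a UART is to pack it into 4 bytes as an IEEE 754 single-precision value; the sketch below does that with the standard `DataView` API. The function names and the little-endian byte order are assumptions and have to match whatever the firmware expects.

```javascript
// Assumption: the firmware reads 4 bytes as a little-endian 32-bit float.
// encodeFloat32LE / decodeFloat32LE are hypothetical names, not the author's API.

// Pack a JavaScript number (64-bit double) into 4 bytes.
function encodeFloat32LE(value) {
  const bytes = new Uint8Array(4);
  new DataView(bytes.buffer).setFloat32(0, value, true); // true = little-endian
  return bytes;
}

// Inverse operation, useful for testing the encoding on the host side.
function decodeFloat32LE(bytes) {
  return new DataView(bytes.buffer, bytes.byteOffset, 4).getFloat32(0, true);
}
```

Note that the brain returns a 64-bit double, so the value is rounded to 32-bit precision when packed this way; for a motor command that loss is usually negligible.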

All of this gets printed to the console in lines such as the one below, showing an impressive 35 ms total roundtrip! Of these 35 ms, 25 ms are a deliberate delay in the main loop (for motor stability), leaving an average of about 10 ms for the Bonsai inference roundtrip over the HTTP endpoint!

# n (idx), x (cart position), v (cart velocity), θ (pole angle), ω (pole velocity), a (brain action), time previous, time current, difference

1945;0.018535396084189415;0.3903769850730896;0.006132812704890966;-0.7007526755332947;0.19268926978111267;1618763600647;1618763600682;35;
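The timing breakdown can be reproduced from such a log line. The sketch below assumes the semicolon-separated field order from the header comment above and the 25 ms loop delay mentioned in the text; the names `parseLogLine`, `inferenceRoundtripMs`, and the record keys are hypothetical.

```javascript
// Field order taken from the header comment of the log; key names are hypothetical.
const LOG_FIELDS = [
  "idx",
  "cartPosition",
  "cartVelocity",
  "poleAngle",
  "poleAngularVelocity",
  "action",
  "timePrevious",
  "timeCurrent",
  "difference",
];

// Parse one semicolon-separated log line into an object of numbers.
function parseLogLine(line) {
  // Drop the trailing semicolon before splitting.
  const parts = line.trim().replace(/;$/, "").split(";");
  if (parts.length !== LOG_FIELDS.length) {
    throw new Error(`expected ${LOG_FIELDS.length} fields, got ${parts.length}`);
  }
  const record = {};
  LOG_FIELDS.forEach((name, i) => {
    record[name] = Number(parts[i]);
  });
  return record;
}

// Loop time minus the deliberate main-loop delay (25 ms according to the text).
function inferenceRoundtripMs(record, loopDelayMs = 25) {
  return record.timeCurrent - record.timePrevious - loopDelayMs;
}
```

For the sample line above, the two timestamps differ by 35 ms, so subtracting the 25 ms delay leaves the roughly 10 ms attributed to the HTTP roundtrip.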

If I now run this, I get a CartPole that is being steered by the Bonsai Brain, resulting in the following video:

Lessons and Conclusion

As mentioned earlier, all of the above did not happen overnight; it took around 100 hours to complete.

Lessons / Takeaways

Since I am not a hardware programmer by profession, it was quite interesting to see how I could interact with all of this. Some of my main lessons are:

- Do not reinvent the wheel, utilize good frameworks such as Arduino to help you

- Next time when acquiring hardware, check the flash memory size… 32 KB is definitely not enough anymore for larger frameworks such as an RTOS

- Motors are interesting to steer, PWM steering is not that easy initially and you should start with understanding how clocks and timers work

  - Here I utilized an STM32F103C8 module with the internal timer TIM3 to steer the motor!

- Start out with smaller projects dedicated to sub-parts (e.g. motor driver, OLED screen steering, …)

- Think about the numbers! If your pole goes left and negative, make sure that when the cart goes left it's also along the negative axis… (I swapped them which lost me 15h 😅)
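The sign lesson from the last point can be turned into a quick check before letting the brain drive the motor. This is only a sketch of the idea: the sign table holds placeholder values, and `applySignConvention` and `leftIsNegative` are hypothetical names, not code from my firmware or interface program.

```javascript
// +1 keeps a raw reading as-is, -1 flips it. Placeholder values for illustration;
// the real values depend on how the encoders are mounted and wired.
const SIGNS = {
  cart_position: 1,
  cart_velocity: 1,
  pole_angle: -1,
  pole_angular_velocity: -1,
};

// Return a copy of the raw observation with each field multiplied by its sign.
function applySignConvention(raw, signs = SIGNS) {
  const out = {};
  for (const [field, value] of Object.entries(raw)) {
    out[field] = value * (signs[field] ?? 1);
  }
  return out;
}

// Sanity check for a sample recorded with the cart pushed left and the pole
// leaning left: with a consistent convention, both values are negative.
function leftIsNegative(observation) {
  return observation.cart_position < 0 && observation.pole_angle < 0;
}
```

Recording one such sample by hand and running it through `leftIsNegative(applySignConvention(sample))` takes a minute and would have caught the swapped axis early.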

Conclusion

Finally, I would like to say that I am impressed by what the Bonsai Platform has to offer! Integrating this was a breeze through the delivered HTTP interface, and I was not limited at all by the performance! From what I could tell (I should measure this) inference took only 1ms and the rest was overhead / delays I introduced to make all of this work. I am very happy they made the choice to go the Protobuf route for this! (interesting fact, I actually did this myself through Roadwork-RL with Protobuf, and it's a pain to do so!)
