Unity ML-Agents - An Introduction

With the ML-Agents framework growing in popularity and having just reached Release 2 (congratulations!), I decided to start by running one of the examples and documenting the process.

Installing Unity

First we have to install Unity. To do so, go to https://unity3d.com/get-unity/download and click the Download Unity Hub link, which lets us install Unity Hub. Once it's installed, open it up.

Activating Unity

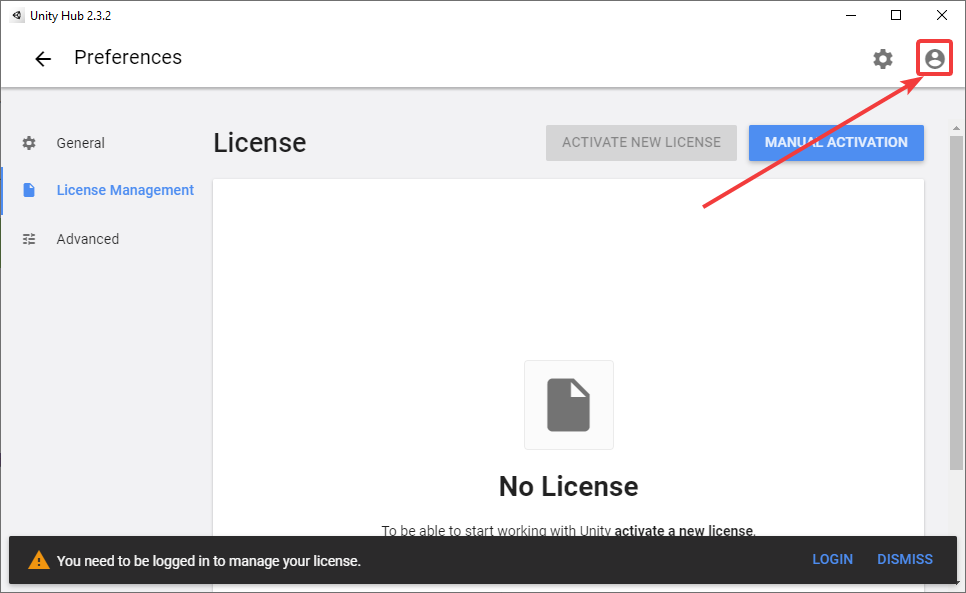

When Unity Hub opens, it will complain about not having a license. This is because we are not signed in yet, so go ahead and sign in by clicking the icon shown below.

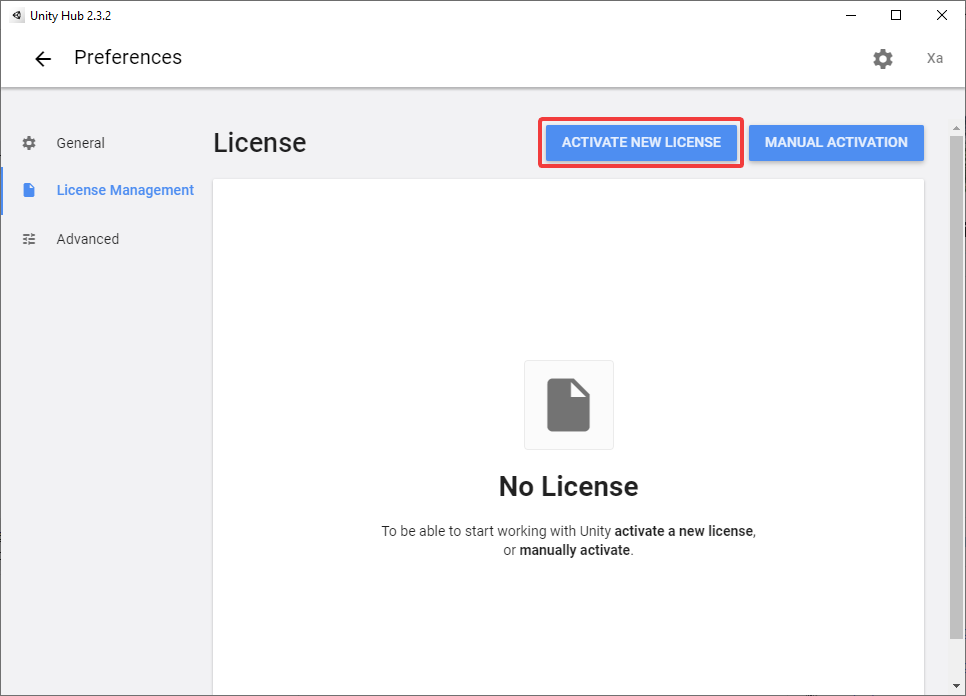

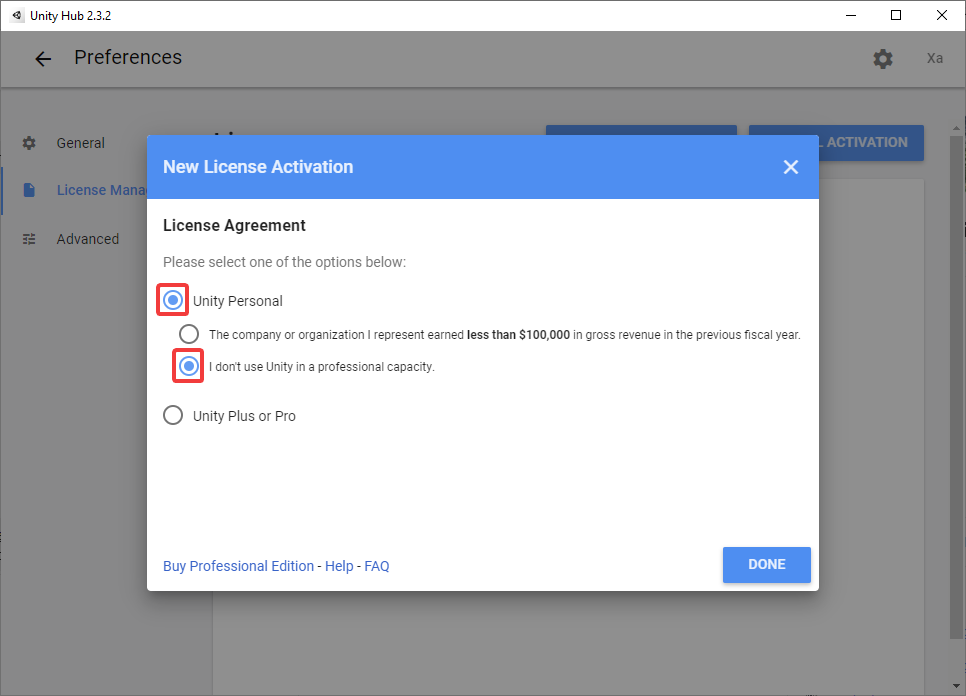

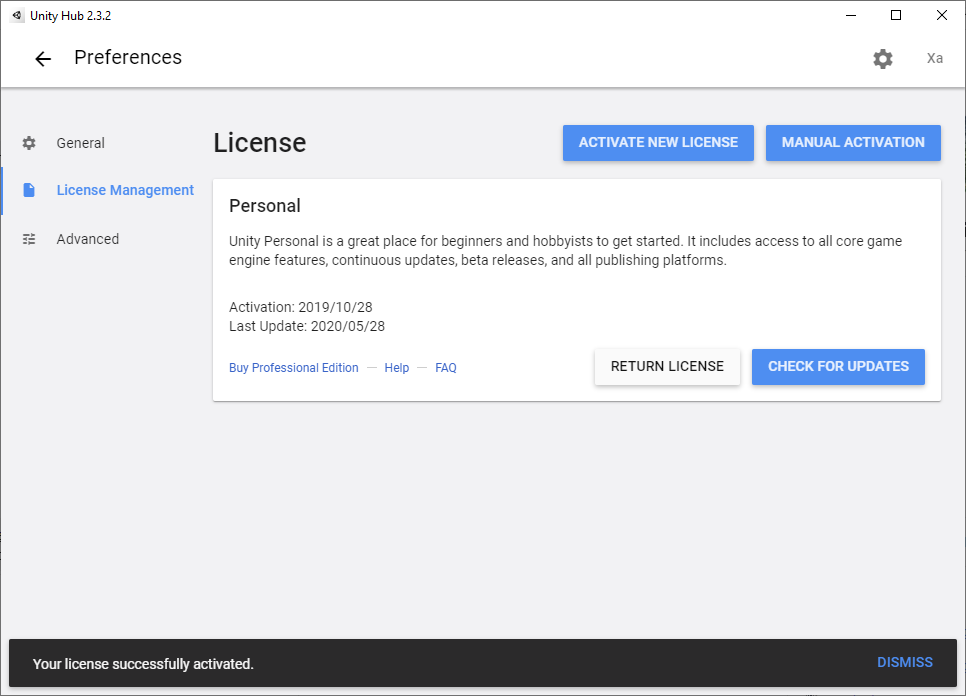

Once signed in, go back to the License Management screen and click Activate New License. Here we can configure how we want to use Unity. In my case this is for Personal use only, so I selected the options shown in the pictures below.

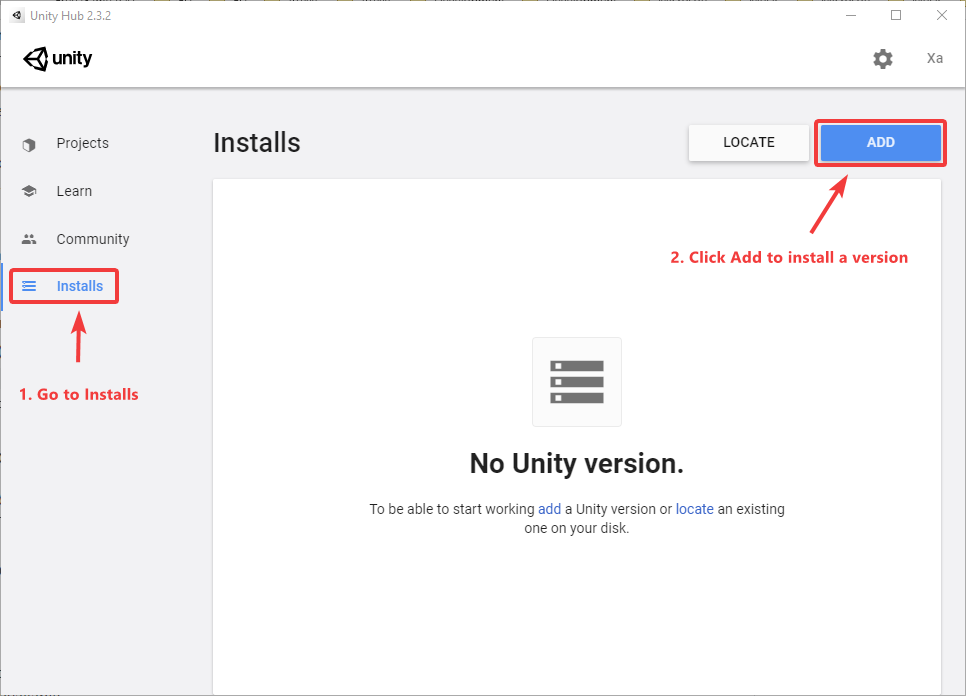

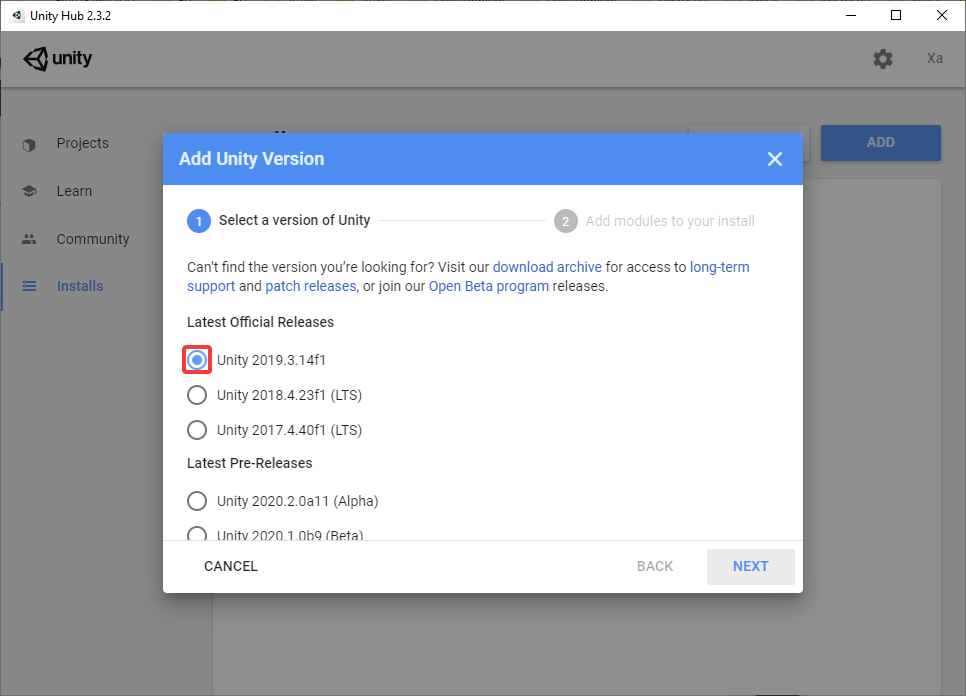

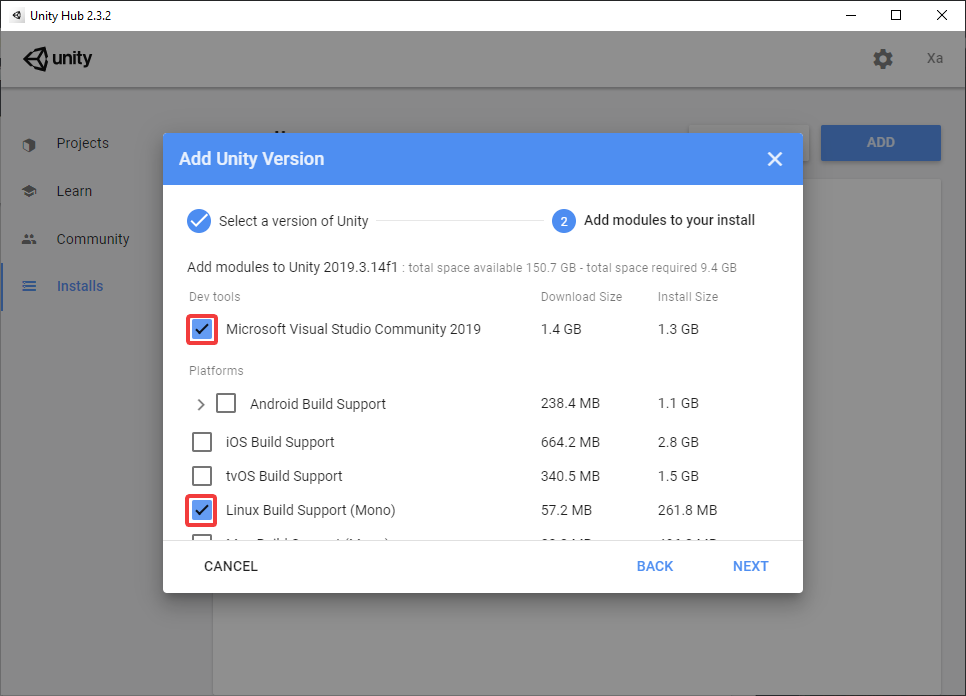

Installing a Unity Version

Now that Unity is activated, we can continue by actually installing a version. To do so, go to the Installs section and click Add in the top right. This opens the version selection pane, where I selected the latest LTS version. In the next step, I also added Linux Build Support, which is required for the Roadwork RL project 😊

Adding ML-Agents to Unity

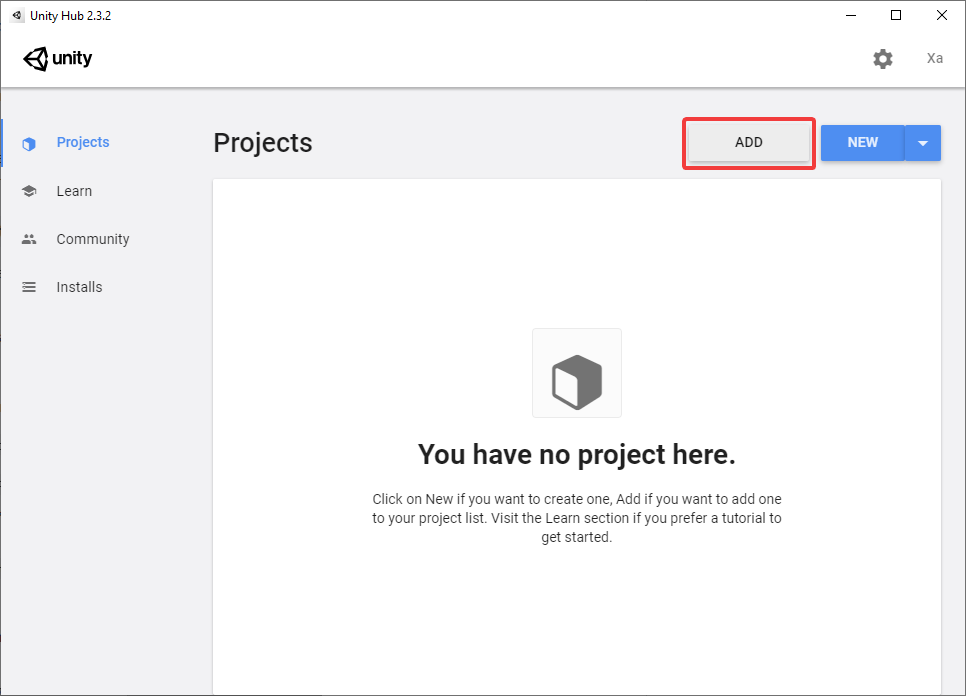

After waiting for the download and installation of Unity to finish, we can finally start installing ML-Agents. I decided to use the source code itself, so I downloaded the latest release from GitHub: https://github.com/Unity-Technologies/ml-agents.

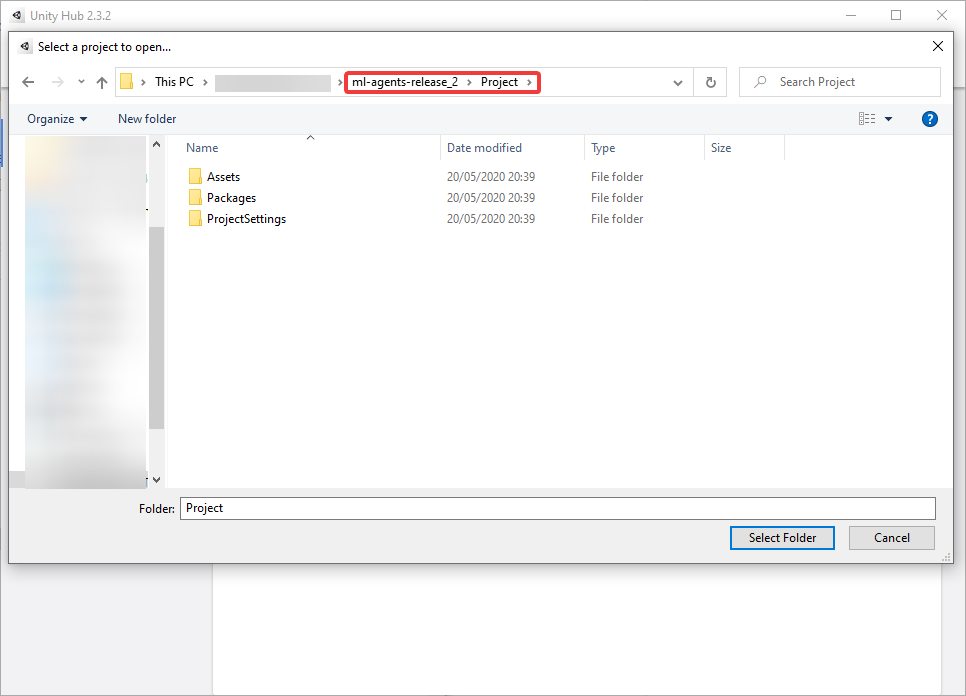

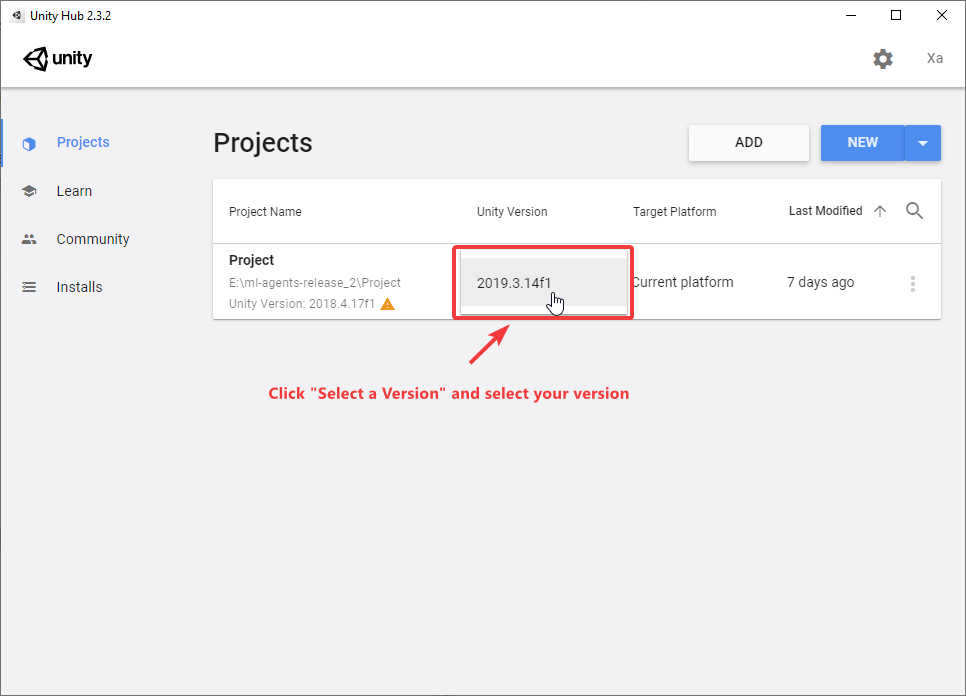

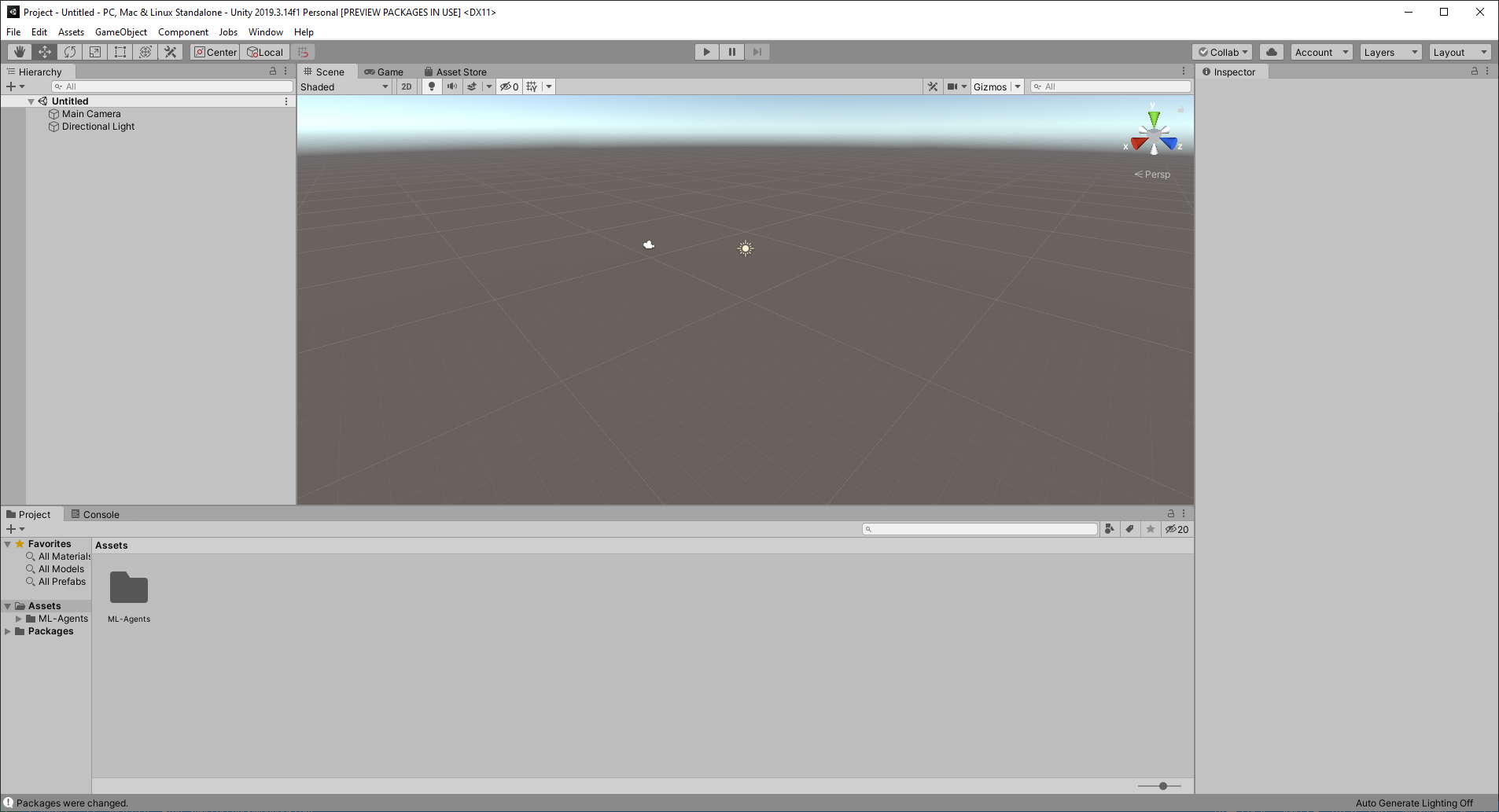

Once downloaded, we extract the contents and go back to Unity Hub. Here we can select Add under Projects and add the ml-agents Project folder (located at <ml_agents_root>/Project). After selecting the Project folder, we need to select our Unity version (which will require an upgrade) and click the added project to finally open the Unity Editor.

Note: You can also use the Package Manager to install ml-agents. To do so, simply open the editor, go to Window -> Package Manager, search for ml-agents and click Install (do enable Preview Packages in the Advanced dropdown to find it).

Running an ML-Agents Example

To run an example, open the Editor by clicking the project we just added in Unity Hub. From there we can browse the different examples included with the ML-Agents release. So let's explore a bit of what we can see here.

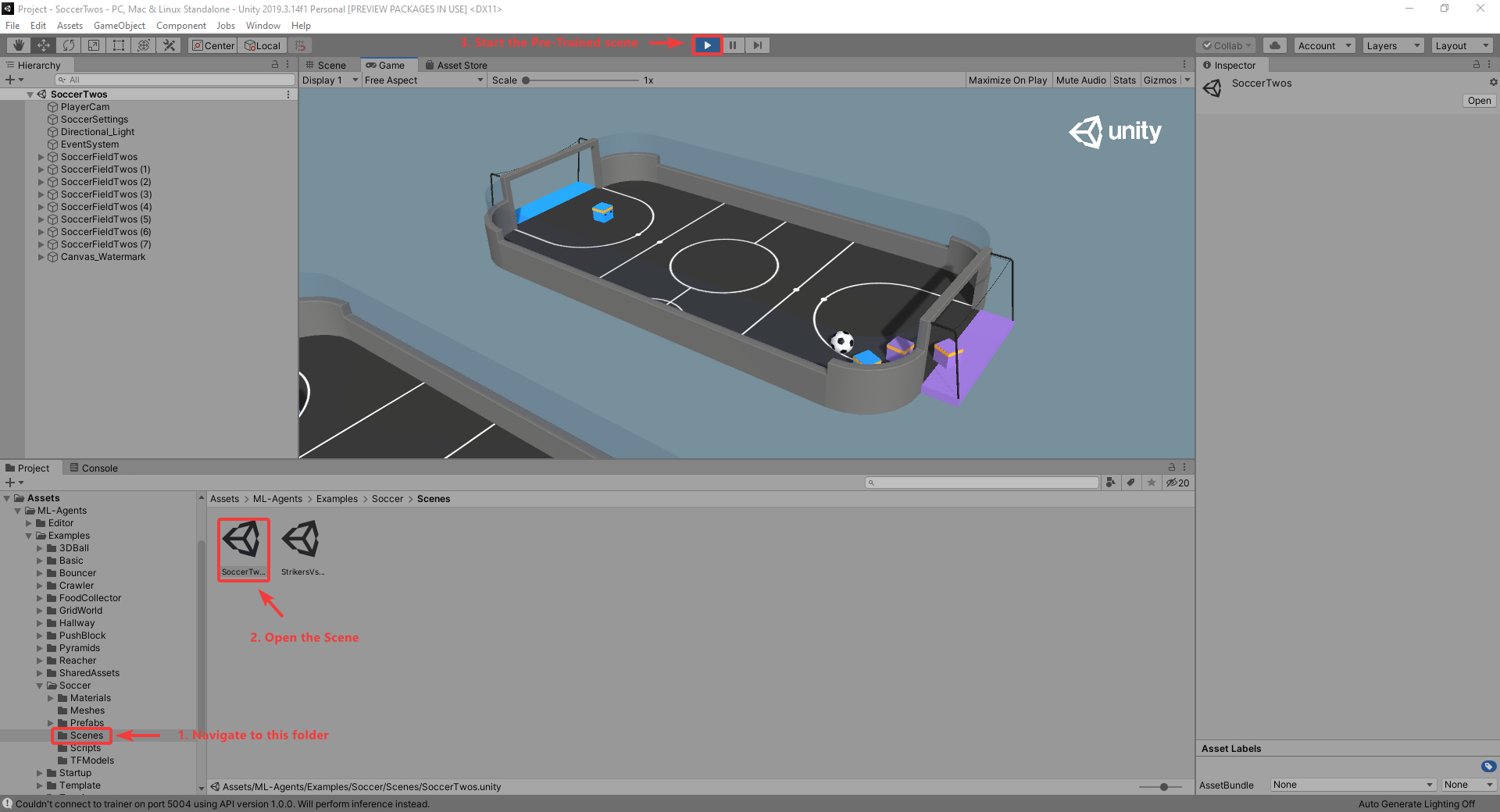

As an example for this blog post, go to the ML-Agents/Examples/Soccer/Scenes folder and open the included scene. When we click the Play button, we will see a soccer match being played by a trained agent.

Exploring ML-Agents

Let's also dive a bit deeper into Unity and ML-Agents and explore what we can do with them.

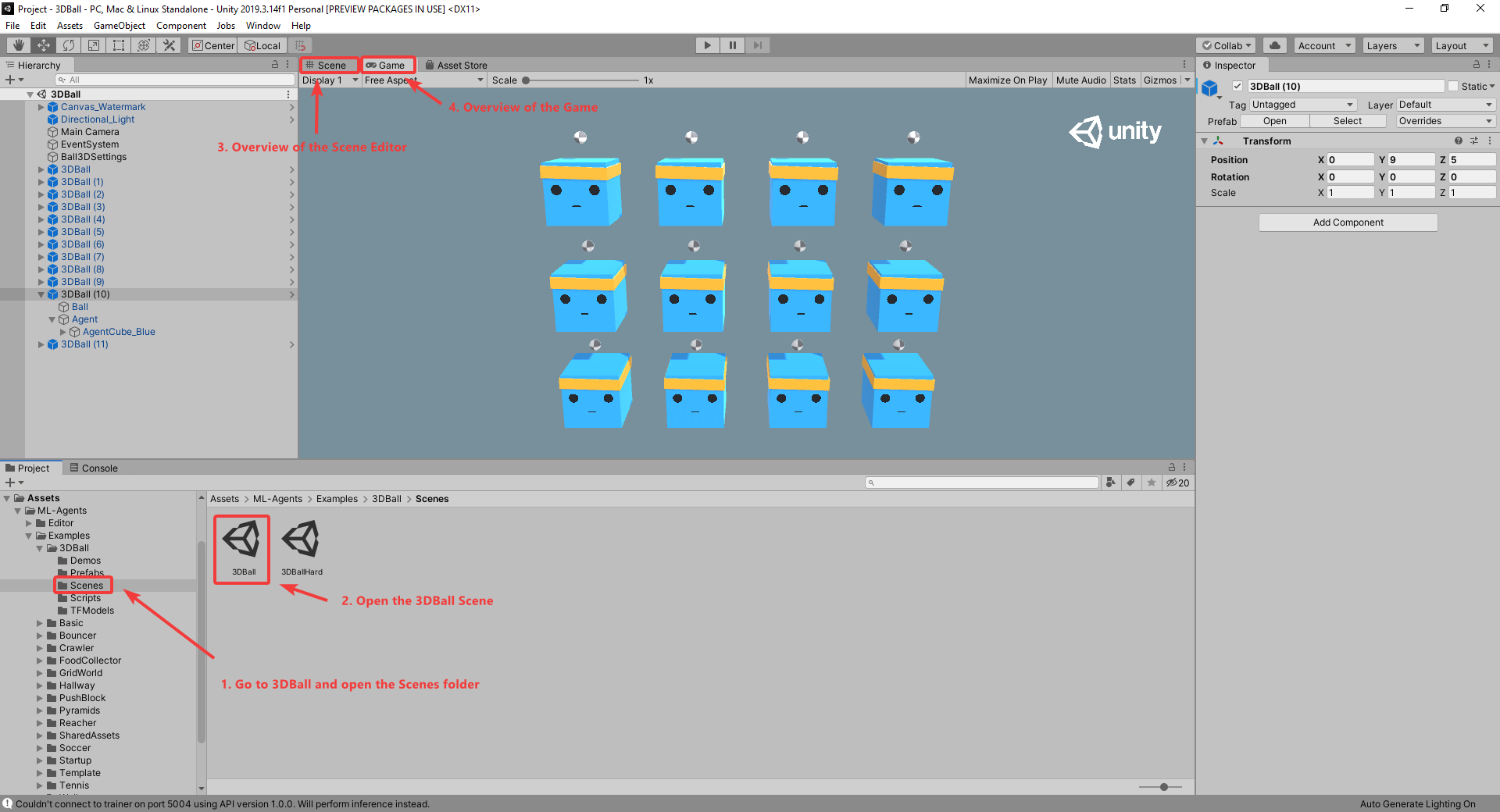

When we open up the 3DBall environment, we can go to the Game tab to see what the game looks like when it runs. In the Scene tab we can model the environment and arrange how the objects are placed and viewed.

Since we are working with Reinforcement Learning, we of course have to find out what our environment is and which actions we can take.

In the example of the 3DBall environment, the goal is to balance the ball on top of the cube. This means that the cube is our agent which will take different actions.

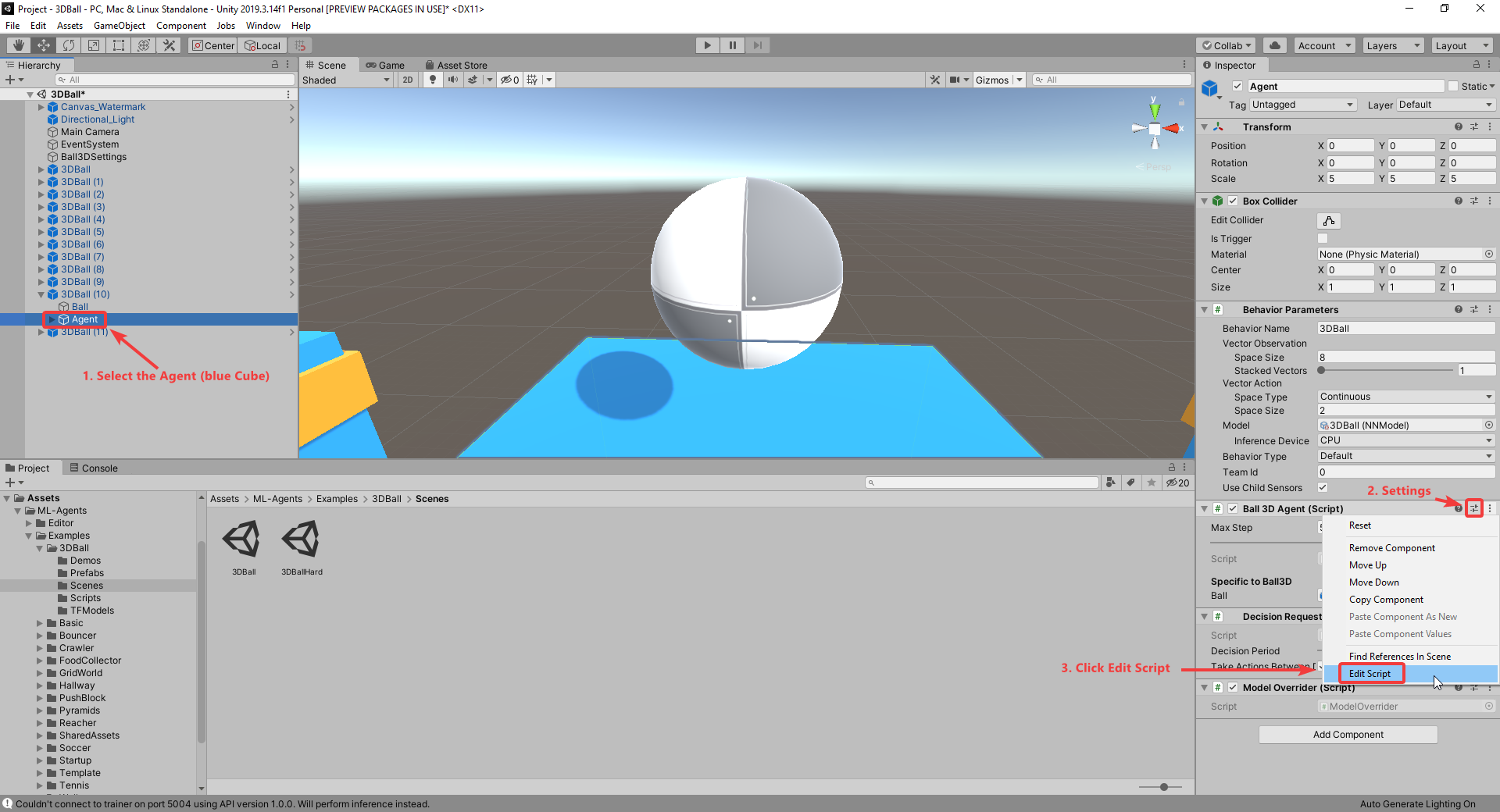

Let's see what the script for our agent looks like by selecting the agent and choosing Edit Script in the Inspector view.

This will open the script in our favorite editor. The script extends the Agent base class and overrides a couple of its methods:

    // Called once when the agent is first set up
    public override void Initialize() {}

    // The agent sends information to the "Brain" which makes a decision on which action to take
    // 3DBall: collect cube x and z rotation; calculate delta ball to cube; ball velocity
    public override void CollectObservations(VectorSensor sensor) {}

    // Execute the action and assign the reward received (the method itself returns nothing)
    // 3DBall: in case we fall, set a big negative reward and call EndEpisode()
    public override void OnActionReceived(float[] vectorAction) {}

    // Called at the beginning of each episode to set up the environment for a new episode
    // E.g. set the ball to balance randomly above the agent
    public override void OnEpisodeBegin() {}

    // Control the agent using the keyboard
    // Horizontal = Left & Right arrow; Vertical = Up & Down arrow
    public override void Heuristic(float[] actionsOut) {}
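To make the method stubs above more concrete, here is a minimal sketch of what a ball-balancing agent could look like when they are filled in. It is not the script that ships with ML-Agents: the class name `BalanceAgentSketch`, the `ball` field, the rotation scale, the fall thresholds and the reward values are all assumptions chosen for illustration. It uses the same method signatures as the stubs above and needs the ML-Agents package inside a Unity project to compile.

```csharp
using UnityEngine;
using Unity.MLAgents;
using Unity.MLAgents.Sensors;

// Hypothetical sketch of a ball-balancing agent; not the script shipped with ML-Agents.
public class BalanceAgentSketch : Agent
{
    // Assigned in the Inspector: the ball this platform has to balance (assumed field).
    public GameObject ball;
    Rigidbody ballRb;

    public override void Initialize()
    {
        // Cache the Rigidbody once so the ball velocity can be read every step.
        ballRb = ball.GetComponent<Rigidbody>();
    }

    public override void CollectObservations(VectorSensor sensor)
    {
        // 2 values: platform rotation components for z and x.
        sensor.AddObservation(transform.rotation.z);
        sensor.AddObservation(transform.rotation.x);
        // 3 values: ball position relative to the platform.
        sensor.AddObservation(ball.transform.position - transform.position);
        // 3 values: ball velocity. Total: 8 observations in this sketch.
        sensor.AddObservation(ballRb.velocity);
    }

    public override void OnActionReceived(float[] vectorAction)
    {
        // Two continuous actions, clamped to [-1, 1]; the factor 2 is an assumed scale.
        var rotateZ = 2f * Mathf.Clamp(vectorAction[0], -1f, 1f);
        var rotateX = 2f * Mathf.Clamp(vectorAction[1], -1f, 1f);
        transform.Rotate(Vector3.forward, rotateZ);
        transform.Rotate(Vector3.right, rotateX);

        // Assumed thresholds for "the ball fell off the platform".
        var offset = ball.transform.position - transform.position;
        var ballFell = offset.y < -2f || Mathf.Abs(offset.x) > 3f || Mathf.Abs(offset.z) > 3f;
        if (ballFell)
        {
            SetReward(-1f);   // penalty for dropping the ball
            EndEpisode();     // triggers OnEpisodeBegin for the next episode
        }
        else
        {
            SetReward(0.1f);  // small reward for every step the ball stays up
        }
    }

    public override void OnEpisodeBegin()
    {
        // Level the platform and drop the ball from a random spot above it.
        transform.rotation = Quaternion.identity;
        ballRb.velocity = Vector3.zero;
        ball.transform.position = transform.position
            + new Vector3(Random.Range(-1.5f, 1.5f), 4f, Random.Range(-1.5f, 1.5f));
    }

    public override void Heuristic(float[] actionsOut)
    {
        // Map the arrow keys to the two continuous actions for manual control.
        actionsOut[0] = -Input.GetAxis("Horizontal");
        actionsOut[1] = Input.GetAxis("Vertical");
    }
}
```

Note that the reward is assigned with `SetReward` inside `OnActionReceived` rather than returned, and that calling `EndEpisode` is what starts a fresh episode.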

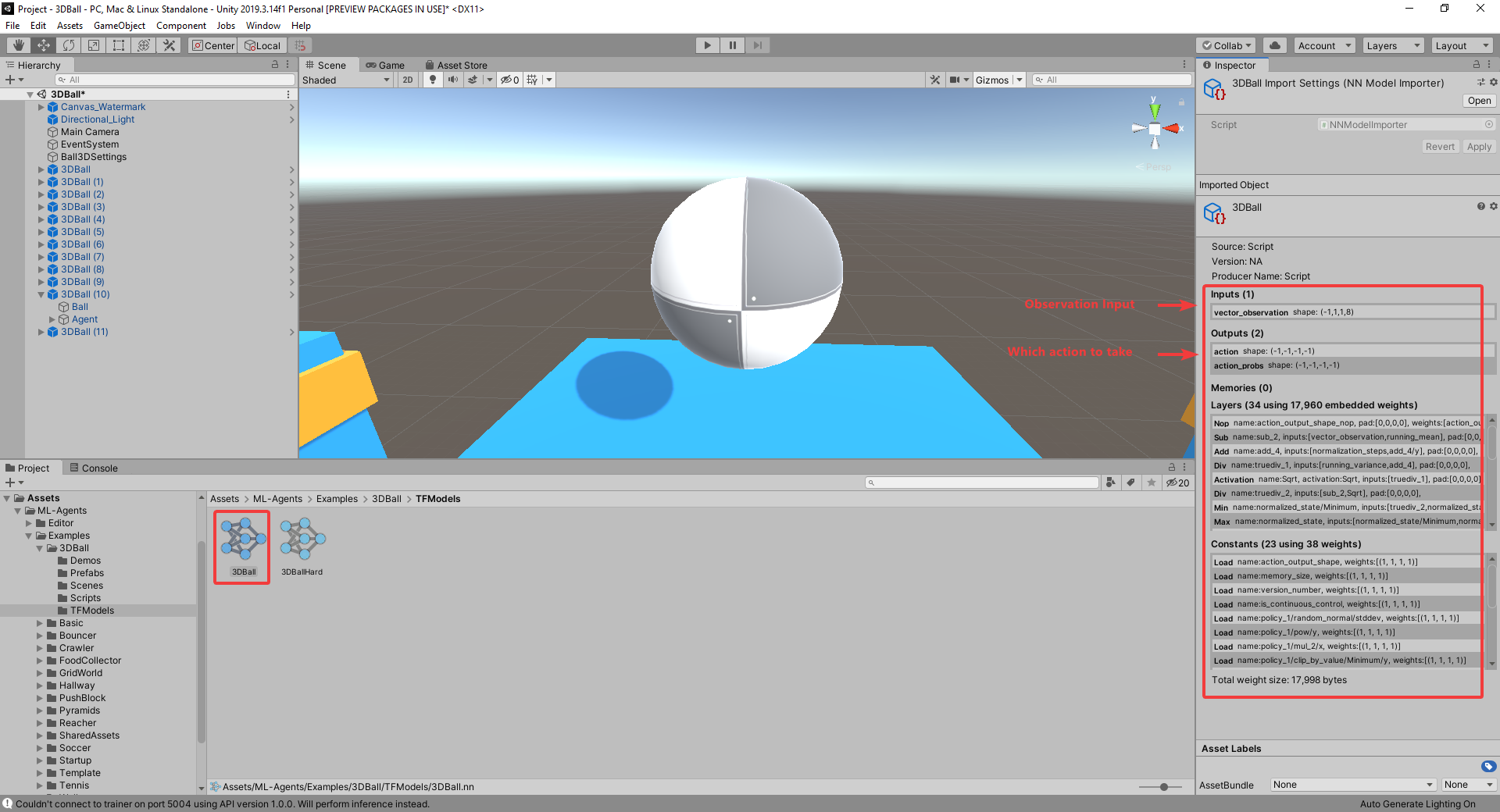

It is important to know that our Reinforcement Learning implementation trains a Neural Network based on the observations collected in the CollectObservations method. This Neural Network then outputs action probabilities for which action we should take, after which the environment tells us which reward is associated with the action we took.

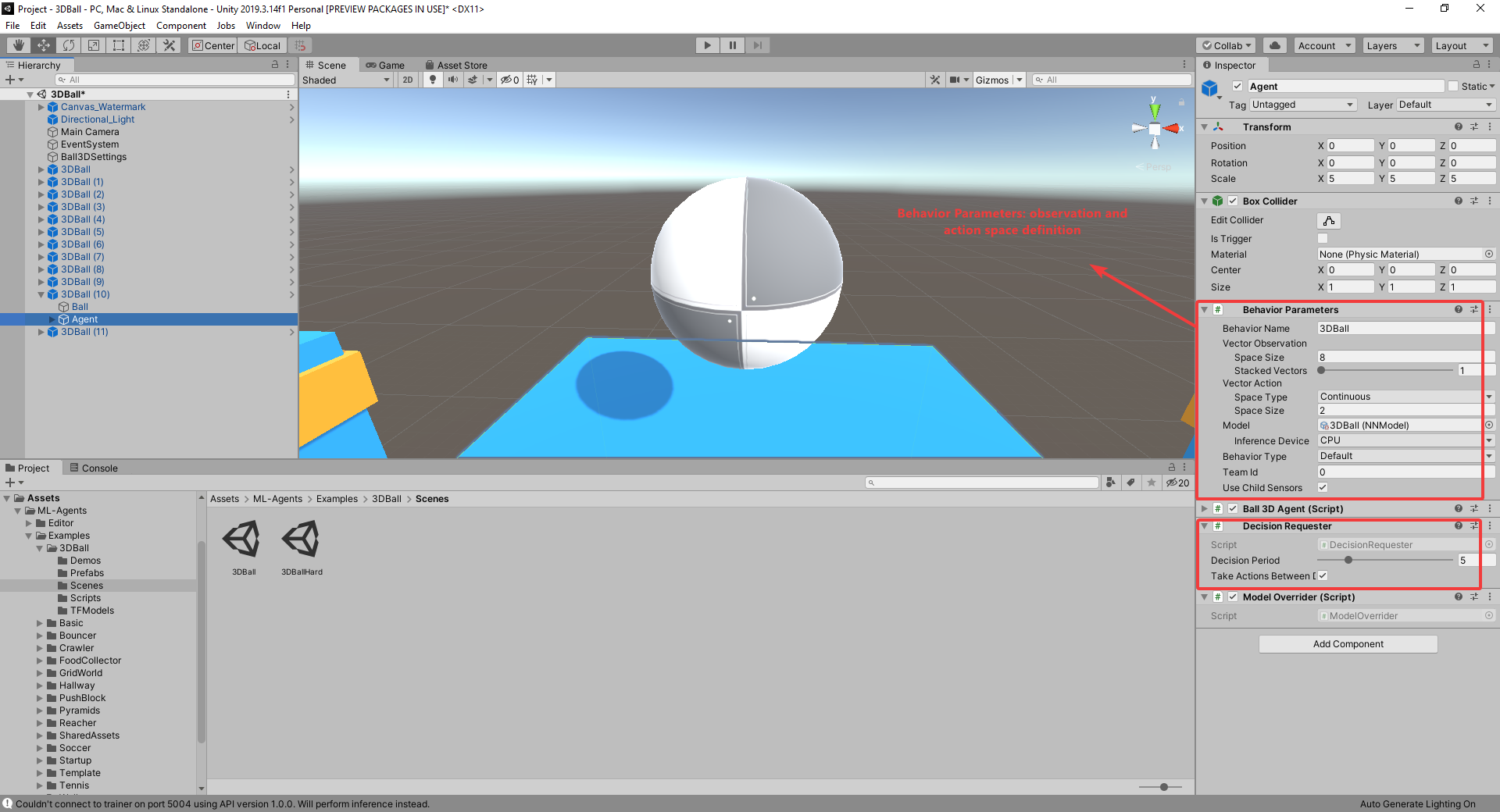

In the picture above we see 2 important things that should be configured correctly:

- Behavior Parameters: Added automatically through our Agent (see the Ball 3D Agent script, which inherits from Agent)
  - Vector Observation
    - Space Size: How many observations do we get?
    - Stacked Vectors: How big do we want the history size to be? (e.g. 5 will return 5 * Space Size observations)
  - Vector Action Space
    - Type: Discrete (a specific action from a set) OR Continuous (a number between -1 and +1)
    - When we pick Continuous, we need to define the Space Size, which is the number of action values (each between -1 and +1) to take.
- Decision Requester: A script that tells the agent to make a decision every X steps.
  - It observes the environment every X steps of the total number of steps defined in the Agent script, and takes an action every step.
  - This is because a certain action is often taken for longer than 1 step (e.g. moving forward).
  - Note: Without this, the agent will sit there doing nothing.
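The arithmetic behind Stacked Vectors and the Decision Requester can be sketched in a few lines of plain C#. This is a conceptual illustration only, not ML-Agents code: the `BehaviorMath` class and both method names are hypothetical, the Space Size of 8 and the period of 5 are example values, and it assumes that the first decision falls on step 0.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper illustrating the settings above; not part of ML-Agents.
public static class BehaviorMath
{
    // Length of the observation vector once the last N observations are stacked.
    public static int StackedObservationSize(int spaceSize, int stackedVectors)
    {
        if (spaceSize < 0 || stackedVectors < 1)
            throw new ArgumentOutOfRangeException();
        return spaceSize * stackedVectors;
    }

    // Steps (0-based) on which a new decision is requested for a given period.
    // On all other steps the agent keeps acting on its previous decision.
    public static List<int> DecisionSteps(int totalSteps, int decisionPeriod)
    {
        if (decisionPeriod < 1)
            throw new ArgumentOutOfRangeException();
        var steps = new List<int>();
        for (var step = 0; step < totalSteps; step++)
        {
            if (step % decisionPeriod == 0)
                steps.Add(step);
        }
        return steps;
    }
}

public static class Program
{
    public static void Main()
    {
        // 8 observations stacked 5 times: prints 40
        Console.WriteLine(BehaviorMath.StackedObservationSize(8, 5));
        // 12 steps with a decision every 5 steps: prints 0,5,10
        Console.WriteLine(string.Join(",", BehaviorMath.DecisionSteps(12, 5)));
    }
}
```

With these example values the network would receive 40 inputs per decision, and only 3 of the 12 steps involve a new decision, while an action is still applied on every step.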

Conclusion

ML-Agents is an interesting bet from Unity! In my personal opinion it is also a very smart one, seeing that they own what is crucial for Reinforcement Learning: the simulation environment. By developing this as a product, they will be able to push beyond the gaming industry alone and into new spaces such as manufacturing.

Combining this with other announcements from their VP about adding simulator capabilities for prototyping industrial designs, I'm quite certain this is something they are interested in.

They certainly have my interest, and I can't wait to see what they come up with next! Something I'd love to see from them is a cloud platform that integrates nicely with it.

Finally, one question remains in my head: "Where does Unity see themselves? Is it in the space of simulation and integration with Reinforcement Learning specialists, or do they want to be doing the End-to-End implementations?" If the first one is the case, I can't wait to see them interact with frameworks such as Facebook ReAgent or Bonsai and their Inkling code.

What do you think? Let me know in the comments below, or through my LinkedIn / Twitter channels.
