Key Takeaways from NVIDIA's GTC 2024: The Future of Autonomous AI and Robotics

Dive into the highlights from NVIDIA's GTC 2024, exploring breakthroughs in autonomous AI, robotics, simulation technologies, and the evolving landscape of reinforcement learning. Discover how these advancements are shaping the future of technology.

I just had the chance to visit NVIDIA's GTC 2024 edition, and damn, was I impressed! It showed a future where Autonomous AI is leading, and it validates what we already know: Autonomous Agents are here to stay!

Autonomous Agents are here to stay!

Of course, this is a subjective post, because as CTO at Composabl, it's my mission to find the latest technology and define a strategy for our Company that makes us stand-out in the technology world. Thus, I was mainly interested in figuring out what:

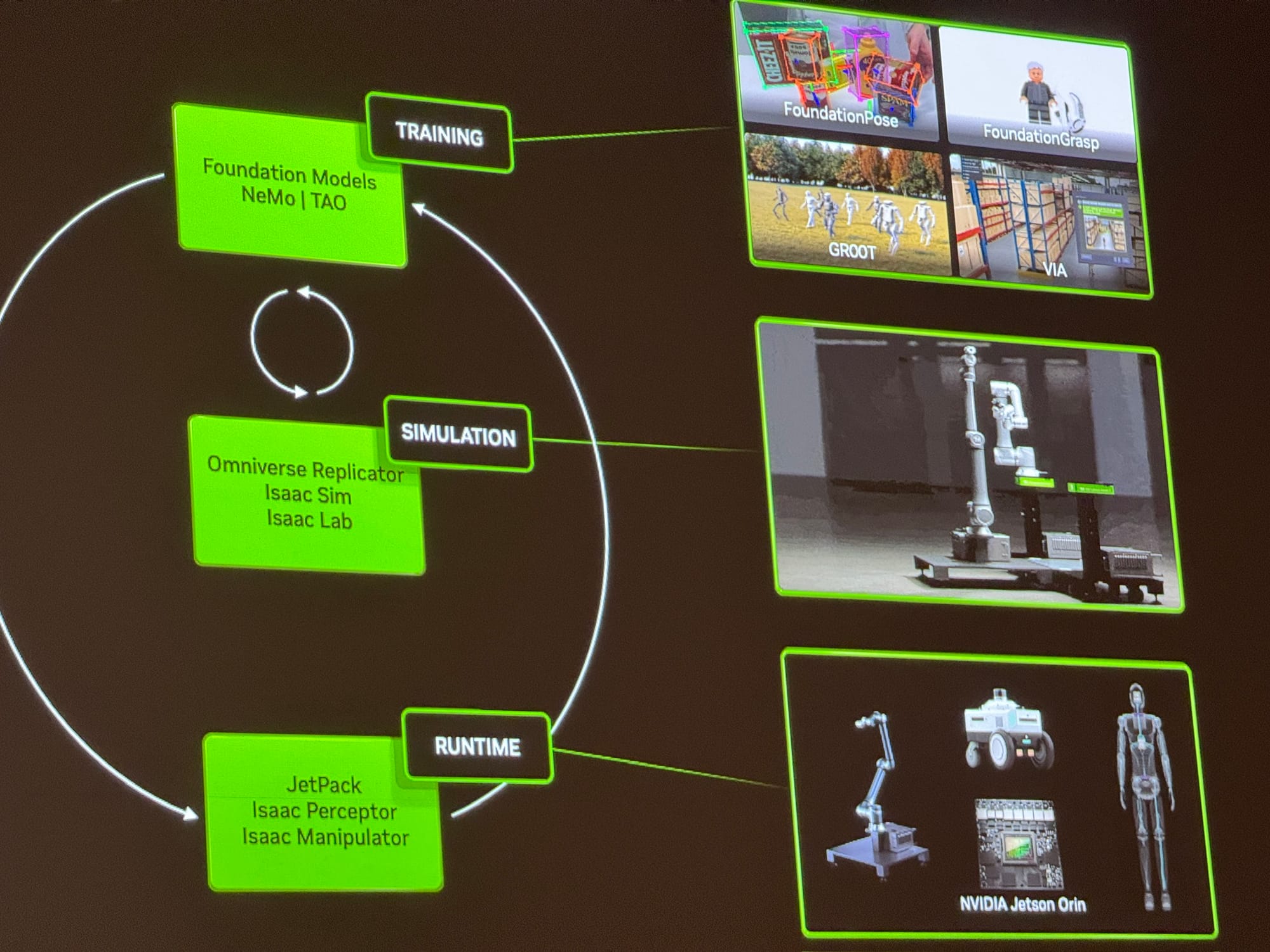

- Nvidia is doing in the Robotics Space

- How simulation is changing and where we should invest in

- Crossing the Sim2Real gap

- What customers require in an edge runtime

NVIDIA's GTC conference covered all of that! They addressed, and often even demonstrated solutions to, most of the questions I had in mind, which allowed an updated strategy to form.

At Composabl, we use simulation software to train our Composed AI Agents (through the SDK, and soon through our UI) and eventually run them in the different form factors our customers require. Judging by GTC, NVIDIA is largely aligned with that vision (with the difference that our USP is Agent creation through our Machine Teaching paradigm, which makes it easy for anyone to create an Agent).

As Composabl, we put Autonomous Agent creation into the hands of everyone!

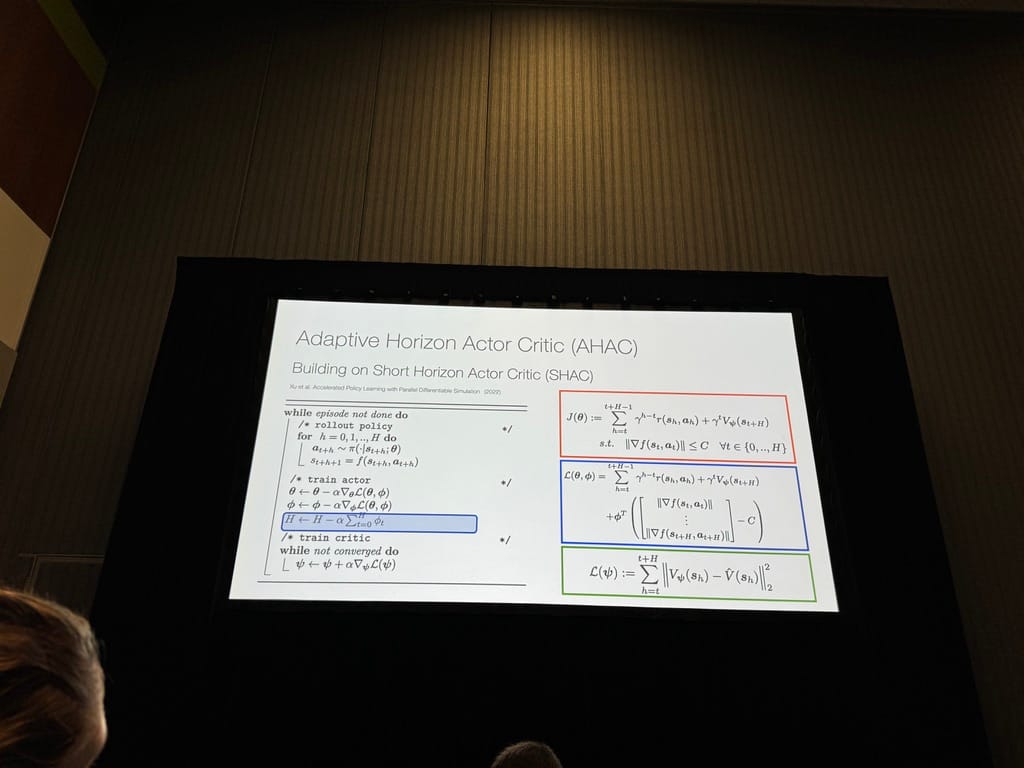

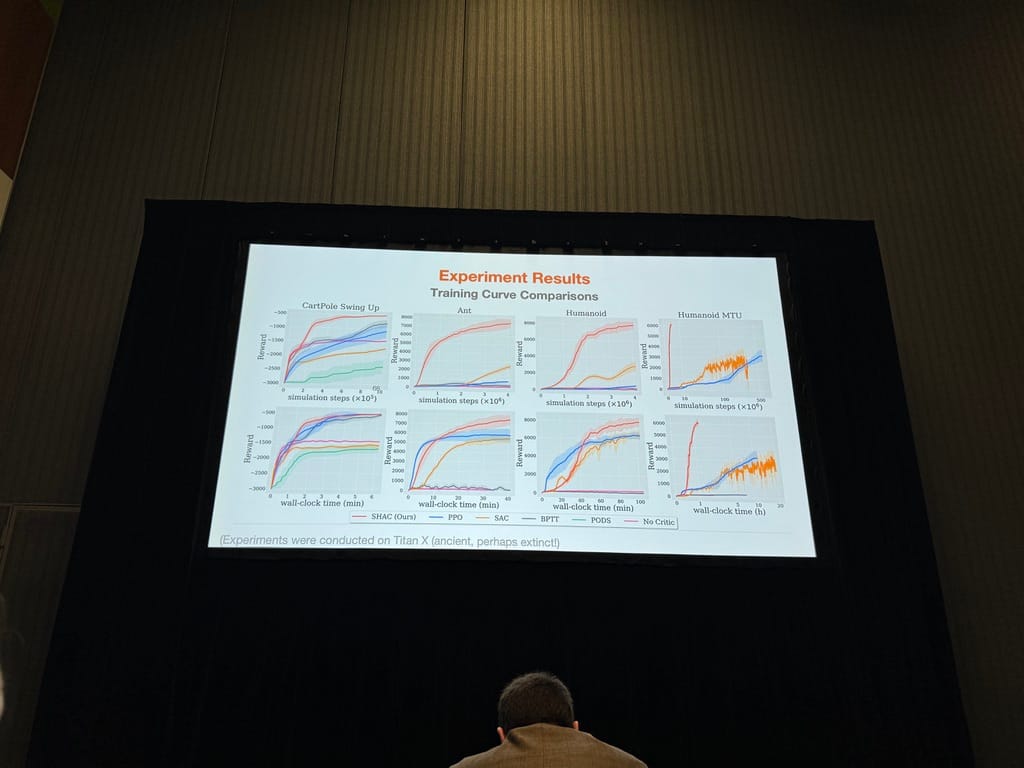

NVIDIA also showed that research is not standing still. Reinforcement Learning can be quite "slow" unless you know how to search through the Observation Space correctly (which is what we ultimately improve through Machine Teaching). The most used algorithm for that currently is PPO, as it typically works across a variety of use cases and simulators. To speed things up, they presented two newer methods, Short-Horizon Actor-Critic (SHAC) and Adaptive Horizon Actor-Critic (AHAC), which combine gradient-based optimization with RL and provide an amazing performance gain under certain circumstances:

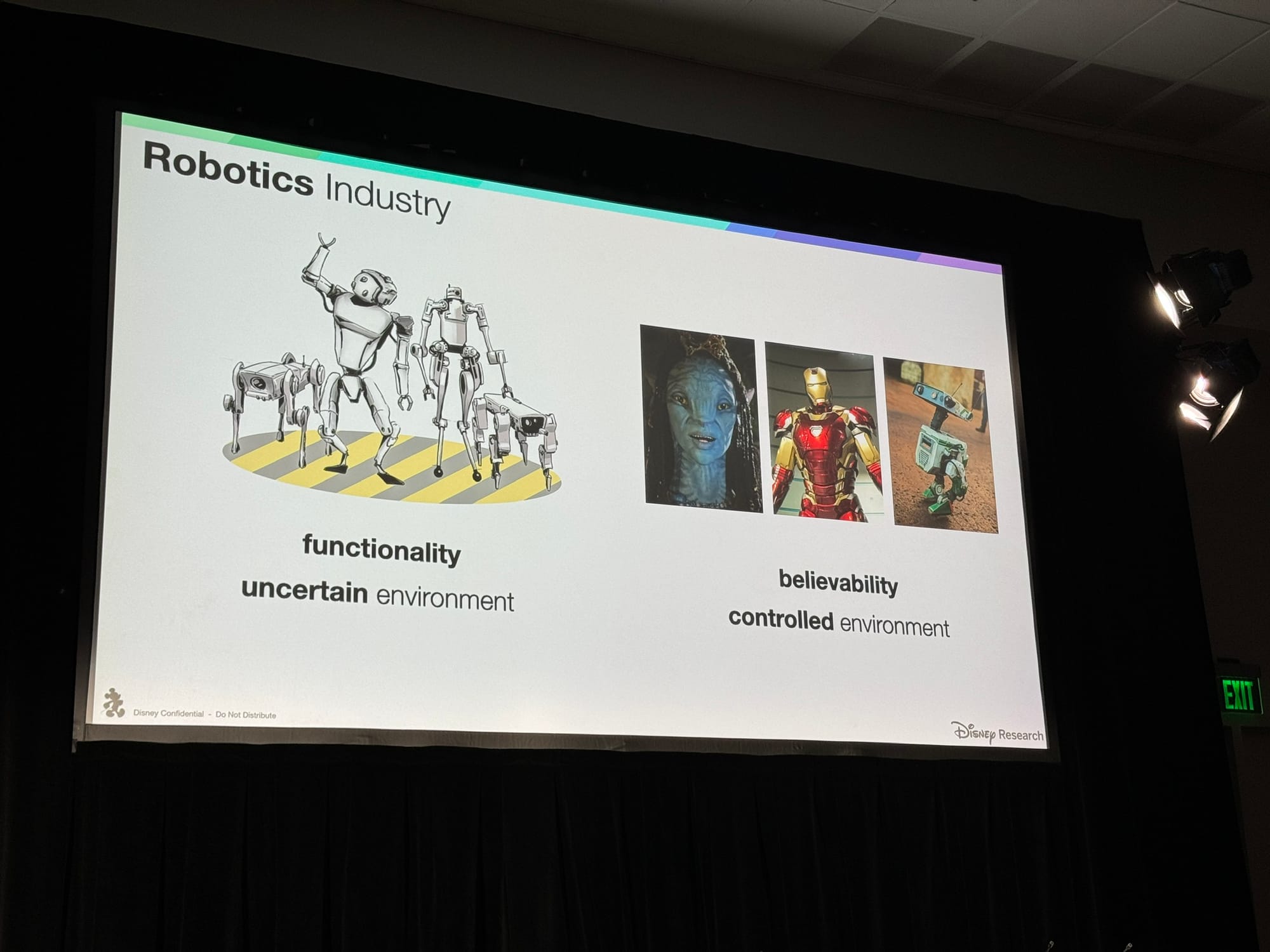

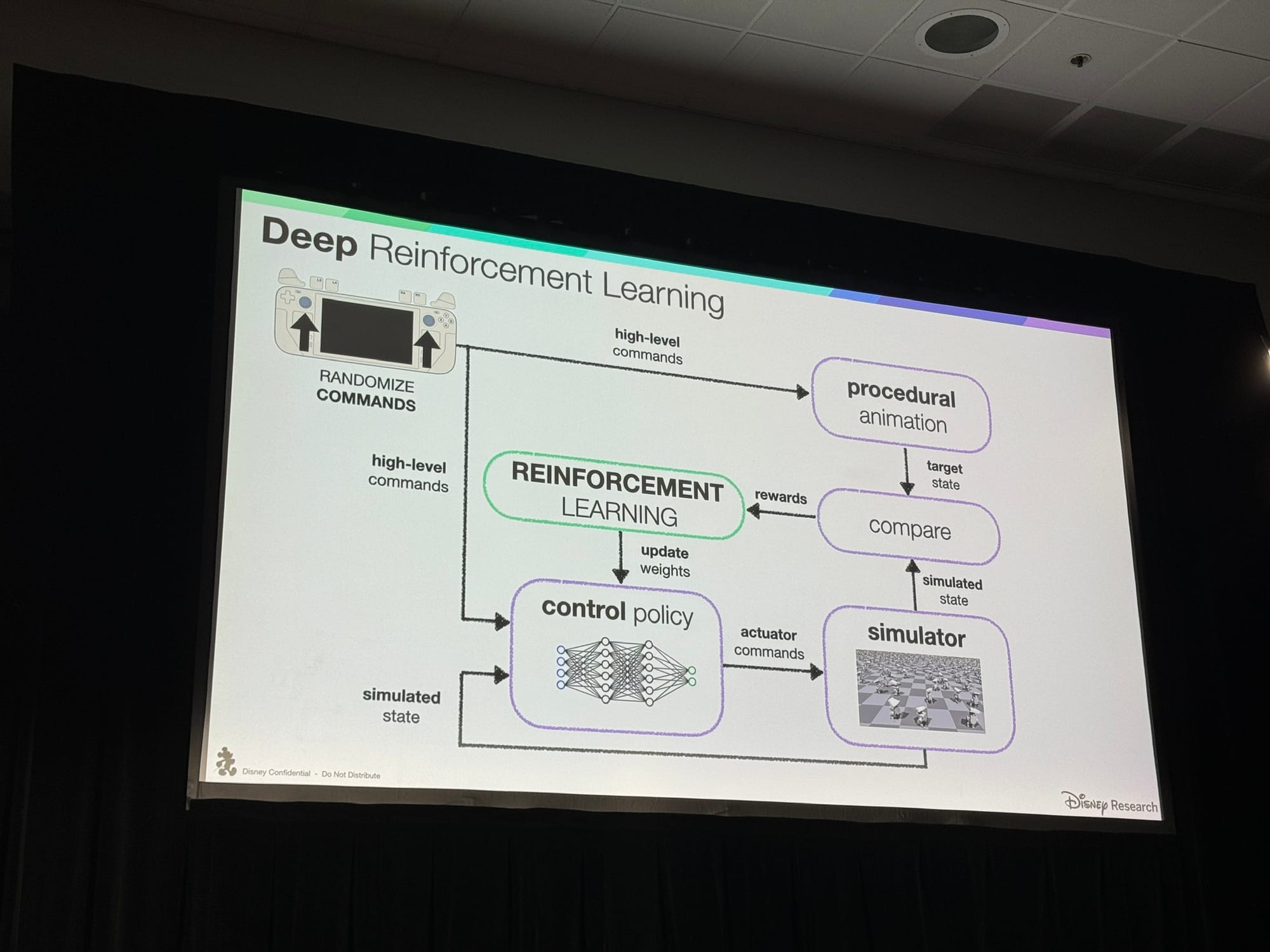
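To make "combining gradient-based optimization with RL" a bit more concrete, here is a minimal toy sketch in Python. It is not NVIDIA's SHAC or AHAC code: it only illustrates the underlying idea of getting the policy gradient by differentiating through the simulator over a short rollout window, instead of estimating it from returns alone. The 1-D dynamics, the linear policy `a = -k * x`, the constants `DT` and `HORIZON`, and all function names are assumptions made up for this example, and the learned critic that the actual methods use is left out.

```python
# Toy sketch: 1-D state, linear policy a = -k * x, hand-derived gradients.
# DT, HORIZON and all function names are illustrative assumptions.

DT = 0.1      # simulation time step
HORIZON = 8   # short rollout window


def rollout(k, x0=1.0, horizon=HORIZON):
    """Return (total reward, d(total reward)/dk) for one short rollout."""
    x, dx_dk = x0, 0.0
    total, dtotal_dk = 0.0, 0.0
    for _ in range(horizon):
        # Dynamics with the policy folded in: x' = x + DT * (-k * x).
        # The derivative is propagated with the state (chain rule), which is
        # the information a differentiable simulator exposes.
        dx_dk = dx_dk * (1.0 - DT * k) - DT * x
        x = x * (1.0 - DT * k)
        # Reward penalises distance from the origin: r = -x'^2.
        total += -x * x
        dtotal_dk += -2.0 * x * dx_dk
    return total, dtotal_dk


def black_box_gradient(k, eps=1e-5):
    """Estimate the same gradient from returns only (two extra rollouts)."""
    return (rollout(k + eps)[0] - rollout(k - eps)[0]) / (2.0 * eps)


def train(k=0.0, learning_rate=0.05, iterations=200):
    """Gradient ascent on the short-horizon return using the analytic gradient."""
    for _ in range(iterations):
        _, grad = rollout(k)
        k += learning_rate * grad
    return k


if __name__ == "__main__":
    k_trained = train()
    print("return before:", rollout(0.0)[0])
    print("return after: ", rollout(k_trained)[0])
```

The point of the contrast: `rollout` gets an exact gradient from a single pass, while `black_box_gradient` needs extra rollouts just to approximate it, and with many policy parameters and noisy returns that gap grows quickly.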

Now, the most interesting session for me personally was, by far, Disney's session on how they are bringing personality to their robots, demonstrated by how they made BD-1 walk. While this might seem like a "gimmick", what matters is what Disney actually achieved here (although they are still quite secretive when it comes to sharing the details).

By combining Reinforcement Learning with pre-programmed animation sequences, they are able to create a stable bipedal walking robot that acts as naturally as the character they invented would.

The biggest learning, however, is how they accomplish this: they compare the animation state with the simulator state and let the Reinforcement Learning algorithm figure out how to close the gap. The result is amazing, and the demo is worth watching for yourself.
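Disney did not share their reward design, so the following is only a sketch of how "comparing the animation state with the simulator state" could be turned into a reward signal. It uses a pattern that is common in motion-imitation work: the closer the simulated joint angles are to the animation frame, the higher the reward. The pose format (a list of joint angles in radians), the `sharpness` parameter, and both function names are hypothetical.

```python
import math


def tracking_reward(animation_pose, simulated_pose, sharpness=5.0):
    """Return a reward between 0 and 1; 1.0 means the robot matches the frame.

    Both poses are sequences of joint angles in radians (hypothetical format).
    """
    if len(animation_pose) != len(simulated_pose):
        raise ValueError("poses must describe the same joints")
    squared_error = sum((a - s) ** 2 for a, s in zip(animation_pose, simulated_pose))
    return math.exp(-sharpness * squared_error)


def episode_reward(animation_frames, simulated_frames):
    """Average the per-frame tracking reward over one rollout."""
    rewards = [tracking_reward(a, s) for a, s in zip(animation_frames, simulated_frames)]
    return sum(rewards) / len(rewards)
```

In a real setup this term would typically be one of several (balance, energy use, smoothness), and the RL algorithm is then free to find whatever motor commands keep the robot upright while staying close to the animation.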

In conclusion, the biggest takeaways for me were:

- Simulation will evolve tremendously over the next couple of years! But be aware that Isaac Sim is ultimately a lock-in: NVIDIA is moving all the processing to the GPU (as it's their bread and butter).

- Reinforcement Learning is amazing, but has caveats. At Composabl, we know this and work around it, but a lot of research is still being done.

- In robotics, it will all be about bringing "personality" to your robot, moving away from the classical static robots towards more lifelike ones.
