Mounting Azure Files on AKS (Kubernetes)

A common scenario is shared storage that all your pods can read from and write to. But how can we accomplish this in Kubernetes?

Prerequisites

A Kubernetes cluster accessible through kubectl

Getting Started

Azure has two storage solutions we can use for this: Files or Disks. In my case I am going with Files, since a file share can be mounted by many pods at the same time. As for SSD vs. HDD, I typically pick SSDs, but HDD often does the trick as well (make sure to check whether write I/O becomes a bottleneck).

According to the linked documentation, Azure uses "Premium SSD" by default. Again, check the I/O you need and make sure the tier matches your use case, as the price differs quite a lot. See below for more information.

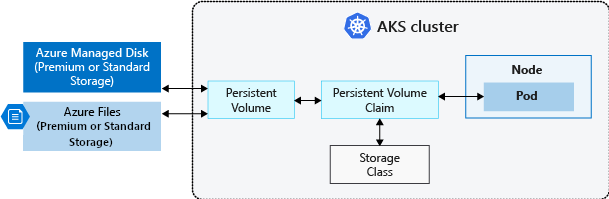
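On AKS, the SSD vs. HDD choice is expressed through the `skuName` parameter of a storage class, which is covered later in this post. As a rough sketch of what an SSD-backed variant could look like (the class name `csi-azurefile-premium-custom` is an assumption made up for this example):

```yaml
# Sketch of a premium (SSD-backed) Azure Files storage class.
# The name below is hypothetical; only skuName selects the tier.
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: csi-azurefile-premium-custom
provisioner: file.csi.azure.com
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
parameters:
  skuName: Premium_LRS # SSD-backed; Standard_LRS is the HDD-backed tier
```

Whether the extra cost is worth it depends on the I/O your workload actually needs.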

Kubernetes, PV and PVCs

Kubernetes models storage with two objects: a Persistent Volume (PV), which represents the actual piece of storage, and a Persistent Volume Claim (PVC), which is a request for that storage (for example, how much capacity we need and how it may be accessed). A pod mounts the claim rather than the volume directly. Azure's documentation explains this quite well.

In this case we are using a custom Storage Class backed by an Azure CSI (Container Storage Interface) driver, which manages the lifecycle of our volume. More specifically, the Azure Files CSI driver.

So how do we create this? It's quite simple: we create two small manifests (a storage class and a claim), mount the claim into a pod, and that's it!

Creating the Custom File Storage Class

Azure creates storage classes out of the box, but I like to use my own for full control. The one below grants full permissions on the entire volume through its mount options and allows the volume to be expanded later.

    # Implement a custom Azure File Storage Class
    # this allows us to fine tune the mount options and disk type
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: csi-azurefile-custom
    provisioner: file.csi.azure.com
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
    allowVolumeExpansion: true
    mountOptions:
      - dir_mode=0777
      - file_mode=0777
      - uid=0
      - gid=0
      - mfsymlinks
      - cache=strict # https://linux.die.net/man/8/mount.cifs
      - nosharesock
    parameters:
      skuName: Standard_LRS

Creating the PVC

Once that is set, we create a claim that uses this storage class and requests 100 GiB.

    # Create a PVC for Azure File CSI driver
    # we can then mount this (e.g., https://raw.githubusercontent.com/kubernetes-sigs/azurefile-csi-driver/master/deploy/example/nginx-pod-azurefile.yaml)
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc-azure-file
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 100Gi
      storageClassName: csi-azurefile-custom
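Because the storage class sets `allowVolumeExpansion: true`, the claim can be grown later by raising the requested size and applying the manifest again. A sketch of the same claim with a larger request (the `200Gi` value is just an illustrative assumption; requests can only grow, not shrink):

```yaml
# Same claim as above, with a larger request to trigger expansion.
# 200Gi is an arbitrary example value, not from the original setup.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-azure-file
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Gi # was 100Gi
  storageClassName: csi-azurefile-custom
```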

Mounting

Finally, we mount the PVC into our pod:

    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: demo-nging
    spec:
      containers:
        - image: mcr.microsoft.com/oss/nginx/nginx:1.19.5
          name: c-nginx
          command:
            - "/bin/bash"
            - "-c"
            - set -euo pipefail; while true; do echo $(date) >> /mnt/azurefile/outfile; sleep 1; done
          volumeMounts:
            - name: persistent-storage
              mountPath: "/mnt/azurefile"
      volumes:
        - name: persistent-storage
          persistentVolumeClaim:
            claimName: pvc-azure-file

Conclusion

When we now run our pod, it writes to the file share and we will see data appearing!
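To check that the storage really is shared, a second pod can mount the same claim and read what the first one writes. A minimal sketch, assuming the hypothetical names `demo-reader` and `c-reader`; it reuses the image, claim, and mount path from the pod above and mounts the share read-only:

```yaml
# Hypothetical reader pod; the names demo-reader / c-reader are assumptions.
kind: Pod
apiVersion: v1
metadata:
  name: demo-reader
spec:
  containers:
    - image: mcr.microsoft.com/oss/nginx/nginx:1.19.5
      name: c-reader
      command:
        - "/bin/bash"
        - "-c"
        # print the most recent line of the shared file every 5 seconds
        - while true; do tail -n 1 /mnt/azurefile/outfile; sleep 5; done
      volumeMounts:
        - name: persistent-storage
          mountPath: "/mnt/azurefile"
          readOnly: true # this pod only reads from the share
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: pvc-azure-file
```

If everything is wired up correctly, `kubectl logs demo-reader` should show timestamps produced by the writer pod.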
